
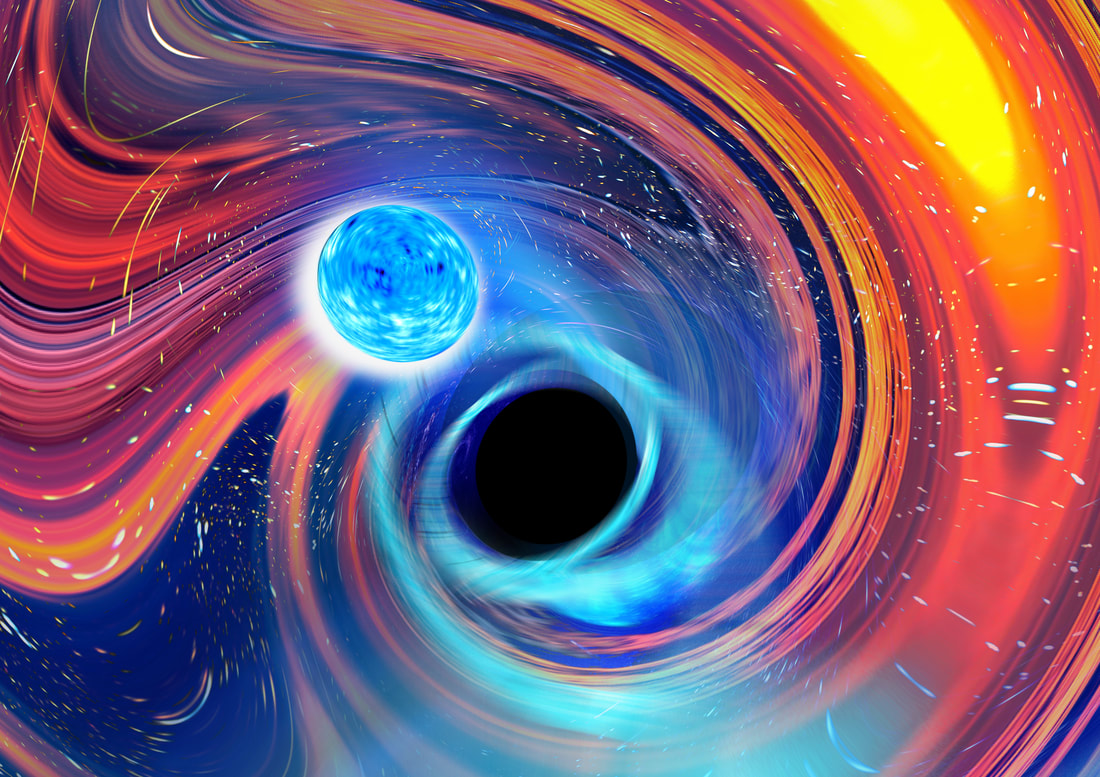

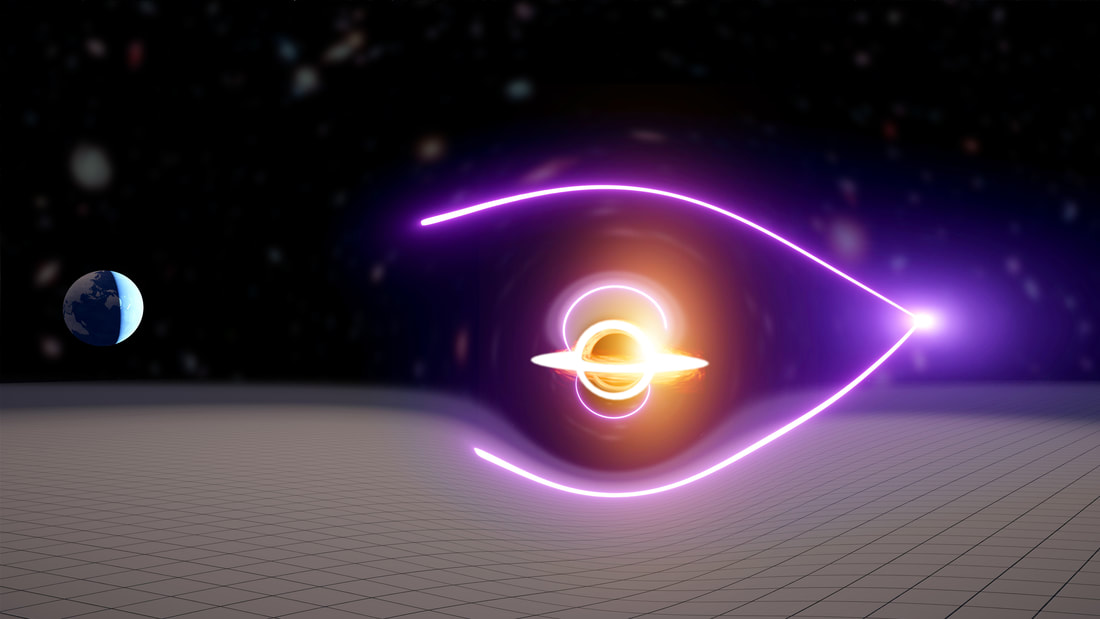

In our recently accepted paper, we examined GW200115, the second black hole-neutron star merger observed by LIGO and Virgo in January 2020. Curiously, GW200115’s black hole could have been spinning rapidly, with its spin misaligned with respect to the orbital motion. This is strange because it implies that the system would have formed in unexpected ways. So, is there something we’re missing? In our paper, we show that the puzzling black hole spin is probably due to something that was added to the LIGO-Virgo measurements, rather than something in the signal itself. It has to do with ‘priors’, which encode assumptions about the population of black hole-neutron star binaries based on our current knowledge. We argue that a better explanation for the GW200115 merger is that the black hole was not spinning at all, and consequently, we place tighter constraints on the black hole and neutron star masses.

What is a prior?

Imagine you want to know the probability of having drawn an Ace from a deck of cards, given that the card is red. You’d need to know the separate probabilities of drawing an Ace and of drawing a red card, as well as how likely an Ace is to be red. The probability of drawing an Ace, independent of the data (“the card is red”), is the ‘prior’ probability of drawing an Ace. Astronomy is similar to a game of cards: we can think of observed gravitational-wave signals as having been dealt to us randomly by the Universe from a cosmic deck of cards. The prior should express our current best knowledge of this deck before we make a measurement, because it’s used to calculate the probability of each possible black hole spin. In the LIGO-Virgo analysis of GW200115, it was assumed that all black hole spins are equally likely. This is fine if we have no strong preference for any value, but we do: observation and theory tell us we shouldn’t expect a rapidly spinning black hole to be paired with a neutron star. This information is key to accurately measuring the properties of GW200115.
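The card example is Bayes’ theorem in miniature. The short Python sketch below is our own illustration and is not code from the paper. It builds a standard 52-card deck and computes three quantities by counting: the prior P(Ace), the evidence P(red) and the likelihood P(red | Ace). It then combines them.

```python
from fractions import Fraction
from itertools import product

# A standard 52-card deck as (rank, suit) pairs.
RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
SUITS = ["hearts", "diamonds", "clubs", "spades"]
RED_SUITS = {"hearts", "diamonds"}
DECK = list(product(RANKS, SUITS))


def is_ace(card):
    return card[0] == "A"


def is_red(card):
    return card[1] in RED_SUITS


def prob(event, cards):
    """Exact fraction of `cards` for which `event(card)` is true."""
    return Fraction(sum(1 for card in cards if event(card)), len(cards))


prior = prob(is_ace, DECK)                                 # P(Ace)
evidence = prob(is_red, DECK)                              # P(red)
likelihood = prob(is_red, [c for c in DECK if is_ace(c)])  # P(red | Ace)

# Bayes' theorem: P(Ace | red) = P(red | Ace) * P(Ace) / P(red)
posterior = likelihood * prior / evidence

# Cross-check by counting Aces among the red cards directly.
direct = prob(is_ace, [c for c in DECK if is_red(c)])
print(prior, posterior, direct)  # 1/13 1/13 1/13
```

Here the posterior equals the prior because a card’s colour says nothing about whether it is an Ace. More generally, when the data carry little information about a quantity, the prior largely determines the answer.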
In our paper, we begin by demonstrating what happens if GW200115 originated from a black hole-neutron star binary with zero spin. In that case, the unrealistic LIGO-Virgo prior generates a preference for a large, misaligned black hole spin. That prior assumes the black hole is equally likely to spin with any magnitude and in any direction. We do this by simulating a gravitational-wave signal from a non-spinning binary, placing it into simulated (but realistic) LIGO-Virgo noise, and inferring its properties assuming any spin value is equally likely. Our simulated experiment yields a spin measurement similar to LIGO-Virgo’s. We are also able to explain analytically why signals from non-spinning black hole-neutron star binaries will generically yield such measurements when very broad spin priors are assumed. While this doesn’t prove that GW200115 is non-spinning, it suggests that the puzzling LIGO-Virgo spin measurement is probably due to their unrealistic priors.

Next, we look to astrophysics to figure out a more realistic prior. Current theoretical modelling suggests that there’s roughly a 95% probability that a black hole-neutron star binary does not spin at all, and only around a 5% probability that it does. We use this astrophysical prior to update the LIGO-Virgo measurements of GW200115’s spins and masses. When we do this, we find that there is almost zero probability that the black hole had any spin at all. At first glance this might seem circular, since we’re giving zero spin almost 20 times more weight than non-zero spin. However, the result also reflects the fact that the data don’t strongly support a rapidly spinning black hole. Additionally, we show that our prior reduces the uncertainty on the black hole and neutron star masses by a factor of 3. Reassuringly, the mass of the neutron star looks significantly more like those found in double neutron star systems in the Milky Way.

Written by Rory Smith and Ilya Mandel, Monash University
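The “almost 20 times more weight” in the GW200115 article above is the prior odds, 0.95/0.05 = 19. A generic way to see why the result is not purely circular is to multiply the prior odds by a Bayes factor. The Bayes factor is the ratio of how well the data are explained without spin versus with spin. This sketch is our illustration of that odds arithmetic, not the calculation in the paper. The Bayes factors are hypothetical inputs.

```python
def posterior_prob_nonspinning(prior_nonspinning: float, bayes_factor: float) -> float:
    """Posterior probability that the black hole is non-spinning.

    prior_nonspinning: prior probability of zero spin (0.95 in the text).
    bayes_factor: evidence for zero spin divided by evidence for non-zero
                  spin; a hypothetical input here, not a value from the paper.
    """
    prior_odds = prior_nonspinning / (1.0 - prior_nonspinning)
    posterior_odds = prior_odds * bayes_factor
    return posterior_odds / (1.0 + posterior_odds)


prior = 0.95
print(f"prior odds: {prior / (1.0 - prior):.1f}")
for bf in (0.1, 1.0, 10.0):
    p = posterior_prob_nonspinning(prior, bf)
    print(f"Bayes factor {bf:>4}: P(zero spin | data) = {p:.3f}")
```

Suppose the data were indifferent, giving a Bayes factor of 1. Then the posterior would simply equal the 95% prior. It only approaches certainty when the data themselves also favour zero spin. A strong enough preference for spin in the data (a small Bayes factor) would pull it down.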


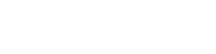

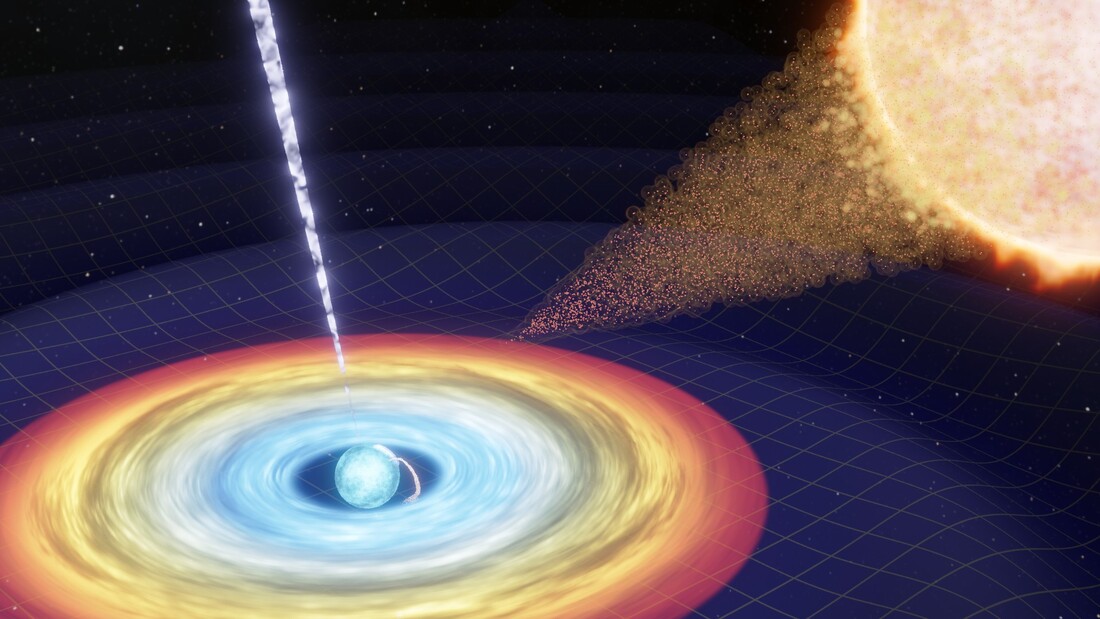

We measured the shapes of the orbits of dead stars by their *eccentricity*: higher eccentricity means the orbital shape is more squashed, while an eccentricity of 0 means that it is circular. The coloured shapes represent the probability of eccentricity for each event, with the widest point of each shape at the highest probability. Two events have their highest probability above the detection threshold for eccentricity, which is indicated with a dotted line.

The LIGO-Virgo-KAGRA Collaboration recently announced that the number of times we've seen dead stars crashing into each other on the other side of the Universe has grown to 90. It's clearly not uncommon for these dead stars, most of them black holes, to slam together in violent merger events. But one outstanding mystery pervades these detections: how do two compact stellar remnants find each other in the vast emptiness of space, and go on to merge? In our recent paper, we found clues to solve this mystery in the shapes of the orbits traced by the stellar objects before they collided.

Often, stars are born into binary systems containing two stars that orbit each other. If these binary stars undergo specific evolutionary mechanisms, they can remain close when they die. Their corpses (black holes and/or neutron stars) can then collide with each other. This kind of binary should trace a circular orbit before it merges. However, sometimes stellar remnants meet in more exciting environments, like the cores of star clusters. In this kind of environment, binary stellar remnants can trace orbits around each other that look like ‘squashed’ circles: more egg-shaped or sausage-shaped. Dense star clusters can also produce binaries in circular orbits. However, about 1 in 25 of the mergers that occur in a dense star cluster are expected to have visibly squashed orbits.
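For readers who want the geometry behind “squashed”: an elliptical orbit with semi-major axis a and semi-minor axis b has eccentricity e = √(1 − b²/a²). This minimal sketch is a textbook illustration. It is not the method used in the paper, which infers eccentricity from the gravitational-wave signal itself.

```python
import math


def eccentricity(semi_major: float, semi_minor: float) -> float:
    """Eccentricity of an ellipse, e = sqrt(1 - b**2 / a**2).

    e = 0 for a circle (b == a); e approaches 1 as the ellipse is squashed flat.
    """
    if not 0.0 <= semi_minor <= semi_major or semi_major <= 0.0:
        raise ValueError("require 0 <= semi_minor <= semi_major and semi_major > 0")
    return math.sqrt(1.0 - (semi_minor / semi_major) ** 2)


for b in (1.0, 0.9, 0.6, 0.2):
    print(f"b/a = {b:.1f} -> e = {eccentricity(1.0, b):.2f}")
```

An orbit can look almost circular by eye (b/a = 0.9) and still have e ≈ 0.44. Gravitational-wave emission gradually circularises orbits, so analyses quote eccentricity at a chosen reference point in the inspiral.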
To map the paths taken by cosmic couples in their pre-merger moments, we studied the space-time ripples produced by the collisions of 36 binary black holes. Two of these collisions, one of them the monster binary black hole GW190521, contained the distinctive signatures of elongated (squashed) orbits. This means that more than a quarter of the observed collisions may be occurring in dense star clusters, because every squashed-orbit system indicates that 24 more mergers may also have happened in this environment. While this result is exciting, it’s not conclusive. Other dense environments, like the centres of galaxies, can also produce merging stellar remnants with squashed orbits. To distinguish the formation habitats of the observed population, we need to scrutinise the orbital shapes of more colliding stellar remnants. Luckily, the number of detected stellar-remnant collisions is growing quickly, so this merger mystery may be solved soon.

Written by OzGrav PhD student Isobel Romero-Shaw, Monash University

Multimessenger astronomy is an emerging field that aims to study astronomical objects using different ‘messengers’, or signals, such as electromagnetic radiation (light), neutrinos and gravitational waves. This field gained enormous recognition after the joint detection of gravitational waves and a gamma-ray burst in 2017. Gravitational waves can be used to identify the sky direction of an event and alert conventional telescopes to follow up with observations of other kinds of radiation. However, following up prompt emission requires rapid and accurate localisation of such events, which will be key for joint observations in the future. The conventional method for accurately estimating the sky direction of a gravitational-wave source is slow, taking a few hours to days, while a faster online version needs only seconds.
The LIGO-Virgo collaboration is developing the capability to detect gravitational waves from electromagnetically bright binary coalescences tens of seconds before the final merger, and to send alerts across the world. The goal is to coordinate prompt follow-up observations with other telescopes around the globe. These could capture potential electromagnetic flashes within minutes of the merger of two neutron stars, or of a neutron star with a black hole; this was not possible before. The University of Western Australia’s SPIIR team is one of the world leaders in this area of research. Determining sky directions within seconds of a merger event is crucial, as most telescopes need to know where to point in the sky. Our recently accepted paper [1] was led by three visiting students (undergraduate and Masters by research) at the OzGrav-UWA node. In it, we applied analytical approximations to greatly reduce the computational time of the conventional localisation method while maintaining its accuracy. A similar semi-analytical approach has also been published in another recent study [2]. The results from this work show great potential and will be integrated into the SPIIR online pipeline in the next observing run. We hope that this work complements other methods from the LIGO-Virgo collaboration and that it will be part of some exciting discoveries.

Written by OzGrav PhD student Manoj Kovalam, University of Western Australia.

[1] https://doi.org/10.1103/PhysRevD.104.104008
[2] https://doi.org/10.1103/PhysRevD.103.043011

This work is now accepted by PRD: https://journals.aps.org/prd/abstract/10.1103/PhysRevD.104.104008

The first evidence of the existence of black holes was found in the 1960s, when strong X-rays were detected from a system called Cygnus X-1. In this system, the black hole is orbited by a massive star blowing an extremely strong wind, more than 10 million times stronger than the wind blowing from the Sun.
Part of the gas in this wind is gravitationally attracted towards the black hole, creating an ‘accretion disc’, which emits the strong X-rays that we observe. These systems with a black hole and a massive star are called ‘high-mass X-ray binaries’ and have been very helpful in understanding the nature of black holes. Nearly 60 years after the first discovery, only a handful of similar high-mass X-ray binaries have been detected. Many more were expected to exist, especially given that many binary black holes (the future states of high-mass X-ray binaries) have been discovered with gravitational waves in the past few years. There are also many binaries in our Galaxy that are expected to eventually become high-mass X-ray binaries. So, we see plenty of both the predecessors and the descendants, but where are all the high-mass X-ray binaries themselves hiding?

One explanation is that even if a black hole is orbited by a massive star blowing a strong wind, it does not always emit X-rays. To emit X-rays, the black hole needs to create an accretion disc, where the gas swirls around and becomes hot before falling in. To create an accretion disc, the falling gas needs ‘angular momentum’, so that all the gas particles can rotate around the black hole in the same direction. However, we find that it is generally difficult for enough angular momentum to fall onto the black hole in high-mass X-ray binaries. This is because the wind is usually considered to blow symmetrically, so almost the same amount of gas flows past the black hole clockwise as counter-clockwise. As a result, the gas can fall into the black hole directly without creating an accretion disc, so the black hole is almost invisible. But if this is true, why do we see any X-ray binaries at all? In our paper, we solved the equations of motion for stellar winds and found that the wind does not blow symmetrically when the black hole is close enough to the star.
Due to tidal forces, the wind blows more slowly in the directions towards and away from the black hole. Because of this broken symmetry in the wind, the gas can now carry a large amount of angular momentum. That is enough to form an accretion disc around the black hole and shine in X-rays. The necessary conditions for this asymmetry are rather strict, so only a small fraction of black hole + massive star binaries will be observable as X-ray binaries. The model in our study explains why only a small number of high-mass X-ray binaries have been detected, but this is only the first step in understanding asymmetric stellar winds. By investigating this model further, we might be able to solve many other mysteries of high-mass X-ray binaries.

Written by OzGrav Postdoc Ryosuke Hirai, Monash University

Melbourne-based astrophysicists launch colouring book encouraging more girls to become scientists
6/12/2021

KEY POINTS:

The authors Debatri Chattopadhyay (Swinburne University) and Isobel Romero-Shaw (Monash University), who are both completing their PhDs in astrophysics with OzGrav, are determined to educate children and young people about the pivotal scientific discoveries and contributions made by women scientists. They also want to encourage more girls, women, and minorities to take up careers in Science, Technology, Engineering, Mathematics and Medicine (STEMM), which remain male-dominated fields.

Debatri, who is originally from India, came to Australia to pursue her PhD at Swinburne University in 2017. She was acutely aware of the lack of women in STEMM fields, as both of her parents worked in biological sciences. “My father is a scientist, so I was aware that this was a field I could go into, and he would talk about amazing biologists like Barbara McClintock, but there was almost no representation of female scientists on TV or in newspapers,” she recalls.

“This colouring book will help children learn about the colourful lives and brilliant minds of these amazing women scientists. As a colouring book, it encourages creative minds to think about scientific problems, which is very much needed for problem solving,” says Isobel, who designed the book and illustrated each of the featured scientists. “These women, who made absolutely pioneering discoveries, used their creativity to advance the world as we know it.”

“I did intense research for the biographies of the women featured in the book and at every nook and crevice was amazed at the perseverance they showed. It is for them and countless others, unfortunately undocumented, that we can do what we do today,” says Debatri. “With Christmas approaching, this book is a perfect gift for young children who have a hunger for science. It’s both fun and educational!” she added.
Last year, Isobel and Debatri were also both selected to participate in Homeward Bound, a global program designed to provide cutting-edge leadership training to 1000 women in STEMM over 10 years. To raise awareness of climate change, this journey will also take Isobel and Debatri all the way to Earth’s frozen desert, Antarctica. The initiative aims to heighten the influence and impact of women with a science background so that they can shape the policy and decision making that affects our planet. In 2017-18, OzGrav Chief Investigator Distinguished Prof Susan Scott was also selected to participate in this program and embark on the journey to Antarctica.

“The saying that ‘you can’t be what you can’t see’ is addressed in this new colouring book,” says Distinguished Prof Scott. “The important women scientists depicted in the book come to life as role models as they are coloured in. Women are underrepresented in physics education and work in Australia. Educating children about women scientists throughout history is an important step in encouraging more girls and women to take up STEMM careers and boost diversity.”

In their “day jobs”, Isobel tries to figure out how the collapsed remains of supergiant stars (black holes and neutron stars) meet up and crash together. She does this by studying the vibrations that these collisions send rippling through space-time, which are called gravitational waves. She also recently published an illustrated book, available on Amazon, called Planetymology: Why Uranus is not called George and other facts about space and words. Planetymology explains the ties between ancient history, astronomy, and language, and introduces the reader to the harsh realities of conditions on other planets. Debatri runs supercomputer simulations of dead stars in binaries, or in massive collections of stars called globular clusters.
Her detailed theoretical calculations help us to understand the astrophysics behind observations of gravitational waves and radio pulsars, and to predict what surprising observations might be made in the future. Debatri is also a trained Indian classical dancer and was a volunteer crew member of the Melbourne-based tall ship ‘Enterprize’. She has recently submitted her thesis and joined the Gravity Exploration Institute at Cardiff University as a postdoctoral fellow.

GIVEAWAY: To celebrate the launch of Women in Physics, OzGrav is giving away free copies of the colouring book to three lucky winners. Simply share the book via Twitter (re-tweet) and tag @ARC_OzGrav to be in the draw. Winners will be announced Monday 20 December!

A new clue to discovering dark matter from mysterious clouds circling spinning black holes
6/12/2021

Gravitational waves are cosmic ripples in the fabric of space and time. They emanate from catastrophic events in space, like collisions of black holes and neutron stars (the collapsed cores of massive supergiant stars). Extremely sensitive gravitational-wave detectors on Earth, like the Advanced LIGO and Virgo detectors, have successfully observed dozens of gravitational-wave signals. They’ve also been used to search for dark matter: a hypothetical form of matter thought to account for approximately 85% of all matter in the Universe. Dark matter cannot be seen directly, but we know that it exists because of the effect it has on objects that we can observe directly. It may be composed of particles that do not absorb, reflect, or emit light, so they cannot be detected by observing electromagnetic radiation. Ultralight bosons are a proposed new type of subatomic particle that scientists have put forward as compelling dark matter candidates.
However, these ultralight particles are difficult to detect because they have extremely small masses and rarely interact with other matter, which is one of the key properties that dark matter seems to have. The detection of gravitational waves provides a new approach to detecting these extremely light bosons using gravity. Scientists theorise that if certain ultralight bosons exist near a rapidly spinning black hole, its extreme gravitational field can trap the particles, creating a cloud around the black hole. Such a cloud can emit gravitational waves over a very long lifetime. By searching for these gravitational-wave signals, scientists may be able to discover these elusive bosons, if they do exist. This could help crack the code of dark matter, or rule out some of the proposed types of particle.

A recent international study by the LIGO-Virgo-KAGRA collaboration carried out the very first all-sky search tailored for the gravitational-wave signals predicted from boson clouds around rapidly spinning black holes. OzGrav Associate Investigator Dr Lilli Sun from the Australian National University was one of the leading researchers. “Gravitational-wave science opened a completely new window to study fundamental physics. It provides not only direct information about mysterious compact objects in the Universe, like black holes and neutron stars, but also allows us to look for new particles and dark matter,” says Dr Sun. Although no signal was detected, the researchers were able to draw valuable conclusions about the possible presence of these clouds in our Galaxy.
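Where would such a signal show up? The standard picture below is background we add here, not a detail given in the study summary. In it, pairs of bosons annihilate into gravitons, producing a nearly monochromatic signal at roughly twice the boson’s Compton frequency, f ≈ 2mc²/h, up to a small binding-energy correction. This minimal sketch converts an assumed boson mass into that frequency.

```python
# Planck constant in eV*s: h = 6.62607015e-34 J s divided by 1.602176634e-19 J/eV.
PLANCK_EV_S = 6.62607015e-34 / 1.602176634e-19


def boson_cloud_gw_frequency_hz(boson_mass_ev: float) -> float:
    """Approximate gravitational-wave frequency from a boson cloud, f ~ 2 m c^2 / h.

    boson_mass_ev is the boson rest energy m c^2 in electronvolts.
    The small gravitational binding-energy correction is neglected.
    """
    return 2.0 * boson_mass_ev / PLANCK_EV_S


for mass_ev in (1e-13, 1e-12):
    f = boson_cloud_gw_frequency_hz(mass_ev)
    print(f"m c^2 = {mass_ev:.0e} eV -> f ~ {f:.0f} Hz")
```

Masses of roughly 10⁻¹³ to 10⁻¹² eV give signals of tens to hundreds of hertz, within the band of ground-based detectors like LIGO and Virgo. The two mass values are examples only, not the range covered by the study.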
In the analysis, they also took into account that the strength of a gravitational-wave signal depends on the age of the boson cloud. The cloud shrinks as it loses energy by sending out gravitational waves, so the signal would weaken as the cloud ages. “We learnt that a particular type of boson clouds younger than 1000 years is not likely to exist anywhere in our Galaxy, while such clouds that are up to 10 million years old are not likely to exist within about 3260 light-years from Earth,” says Dr Sun. “Future gravitational wave detectors will certainly open more possibilities. We will be able to reach deeper into the Universe and discover more insights about these particles.”

Also featured in Cosmos Magazine and Sci Tech Daily.

Many of the heaviest stars in the Universe will end their lives in a bright explosion, known as a supernova. It briefly outshines the rest of its host galaxy, allowing us to view these rare events out to great distances. At the lower end of this mass range, the supernova explosion will squeeze the core of the star into a ball of neutrons much denser than anything that can be reproduced in laboratories. So, scientists must rely on theoretical models and astronomical observations to study these objects, known as neutron stars. At the very low end of this range, the supernova explosions are thought to be weaker and dimmer, but even with state-of-the-art supernova simulations, it’s challenging to test this hypothesis. In our recently published study, we found a new way to test for these weaker supernovae. Weak supernova explosions are expected to produce slowly moving neutron stars, so measured neutron star speeds can be used to estimate how often weak supernovae occur, without the need for expensive simulations. Neutron stars don’t shine brightly like other stars. Instead, they produce a very narrow beam of radio waves, which may (if we’re lucky) point towards the Earth.
As the neutron star rotates, the beam appears to flash on and off, creating a lighthouse effect. When this effect is observed, we refer to the neutron star as a pulsating star, or pulsar. Recent advances in radio telescopes allow for precise measurements of pulsar velocities. We combined these measurements with simulations of millions of stars and found that the typically high pulsar speeds did not allow for many weak supernovae.

However, there is a caveat: many of the massive stars that produce neutron stars are born in stellar binaries. If a normal supernova occurs in a stellar binary, the neutron star remnant will experience a large recoil kick, like a cannonball rushing away from the exploding gunpowder. It will then likely be ejected from its companion star and may later be observed as a single pulsar. But if the supernova is weak, the neutron star may not receive enough energy to escape the gravitational tug of its companion star, and the stellar binary system will remain intact. This is a necessary step in the formation of neutron star binaries, so the existence of these binaries proves that some supernova explosions must be weak. We needed to explain both the existence of neutron star binaries and the absence of slow-moving pulsars. To do so, weak supernovae can only occur in very close stellar binaries, not in single, isolated stars. This is useful input for supernova simulations. It also adds to a growing body of research suggesting that weak supernovae may only happen in stellar binaries whose stars have previously interacted with each other. Studies like this simulate many stars in relatively low detail. They are key to understanding the effects of uncertain physics on stellar populations, which is unfeasible with highly detailed simulations.

Written by PhD student Reinhold Wilcox, Monash University

With the growing catalogue of binary black hole mergers, researchers can study the overall spin properties of these systems to uncover how they formed and evolved.
Recent work paints a conflicting picture of the spin magnitudes and orientations of merging binary black holes, pointing to different formation scenarios. Our recent study, published in the Astrophysical Journal Letters, resolved these conflicts and allowed us to understand the spin distribution of binary black holes.

Forming black hole binaries

There are two main pathways to form a binary black hole. The first is ‘isolated’ evolution, in which the black hole binary forms from the core collapse of two stars in a binary. The second is ‘dynamical’ evolution, in which interactions between black holes in dense stellar clusters can lead to a pair of black holes capturing each other to form a binary. These pathways leave distinct features in the spin distribution of binary black hole mergers. Binaries formed via isolated evolution tend to have spins that are closely aligned with the orbital angular momentum. Dynamically formed systems have randomly oriented spins, so their distribution of spin tilts is isotropic. In the latest population study from LIGO-Virgo, we saw evidence for both of these channels. However, a more recent study by Roulet et al. (2021) showed that the population was consistent with the isolated channel alone. This inconsistency raises the question: how can we obtain different conclusions from the same population? The answer is model misspecification: the previous spin models were not designed to capture possible sharp features or sub-populations in the spin distribution.

The emerging picture of the spins of black hole binaries

Using a catalogue of 44 binary black hole mergers, this new study finds evidence for two populations within the spin distribution of black hole binaries. One has negligible spins, and the other is moderately spinning with preferential alignment with the orbital angular momentum. This result can be fully explained by the isolated formation scenario.
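A toy sampler can illustrate the difference between the two channels and the two-subpopulation picture. Spin tilt is described here by cos(tilt). Random (isotropic) orientations make it uniform between −1 and 1, while aligned spins pile up near +1. The mixing fraction, spin range and tilt spread below are made-up values for illustration, not the study’s fitted parameters. The sketch only draws synthetic spins and does not perform any population inference.

```python
import random
import statistics


def isotropic_cos_tilt(rng: random.Random) -> float:
    """Dynamical channel: random spin orientations give cos(tilt) uniform on [-1, 1]."""
    return rng.uniform(-1.0, 1.0)


def aligned_cos_tilt(rng: random.Random, spread: float = 0.1) -> float:
    """Toy isolated channel: cos(tilt) concentrated just below +1 (spin along the orbit)."""
    return max(-1.0, 1.0 - abs(rng.gauss(0.0, spread)))


def two_population_spins(n: int, frac_spinning: float = 0.3, seed: int = 0):
    """Draw (spin magnitude, cos tilt) pairs from a toy two-subpopulation model.

    A fraction `frac_spinning` of binaries spin moderately and are preferentially
    aligned; the rest have negligible spin, for which tilt is undefined (None).
    All parameter values are hypothetical.
    """
    rng = random.Random(seed)
    draws = []
    for _ in range(n):
        if rng.random() < frac_spinning:
            draws.append((rng.uniform(0.2, 0.6), aligned_cos_tilt(rng)))
        else:
            draws.append((0.0, None))
    return draws


rng = random.Random(1)
iso = [isotropic_cos_tilt(rng) for _ in range(10_000)]
draws = two_population_spins(10_000)
spinning = [cos_t for chi, cos_t in draws if chi > 0.0]
print(f"isotropic channel: mean cos(tilt) = {statistics.mean(iso):.2f}")
print(f"toy mixture: {len(spinning) / len(draws):.0%} spinning, "
      f"mean cos(tilt) of spinning = {statistics.mean(spinning):.2f}")
```

The isotropic draws average to a cos(tilt) near zero. The spinning members of the toy mixture cluster near +1, which is the signature of preferential alignment described above.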
The progenitors of most black holes lose their angular momentum when the stellar envelope is removed by the binary companion, forming black hole binaries with negligible spin. In a small fraction of binaries, the second-born black hole is spun up via tidal interactions. This study opens a number of interesting avenues to explore, such as the relationship between the mass and spin of these different subpopulations. Investigating such correlations can help improve the accuracy of our models and enable us to better distinguish between different evolutionary pathways of binary black holes.

Written by OzGrav PhD student Shanika Galaudage, Monash University

Recently published in ApJL: https://iopscience.iop.org/article/10.3847/2041-8213/ac2f3c

Key points:

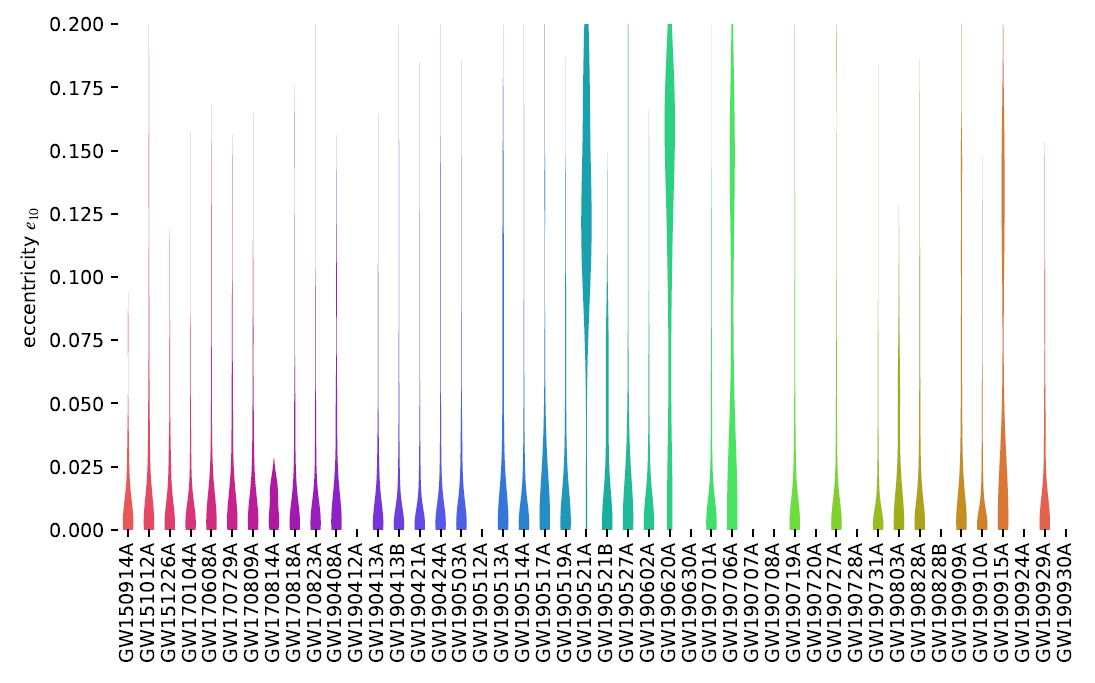

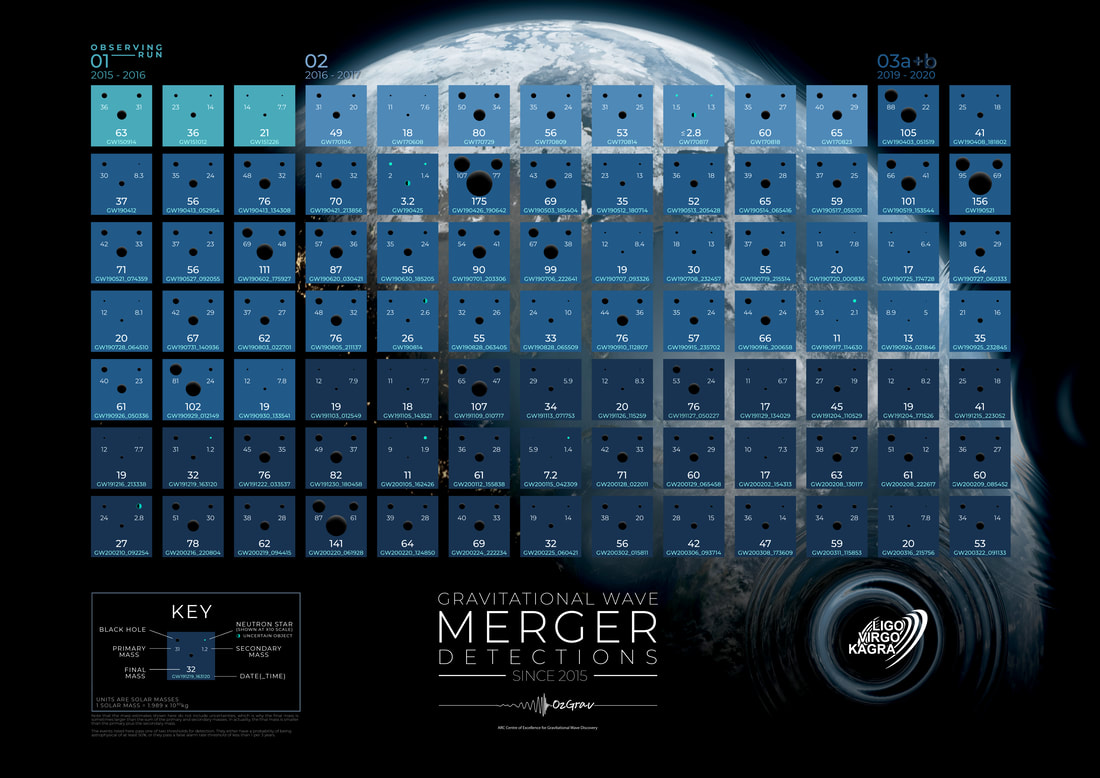

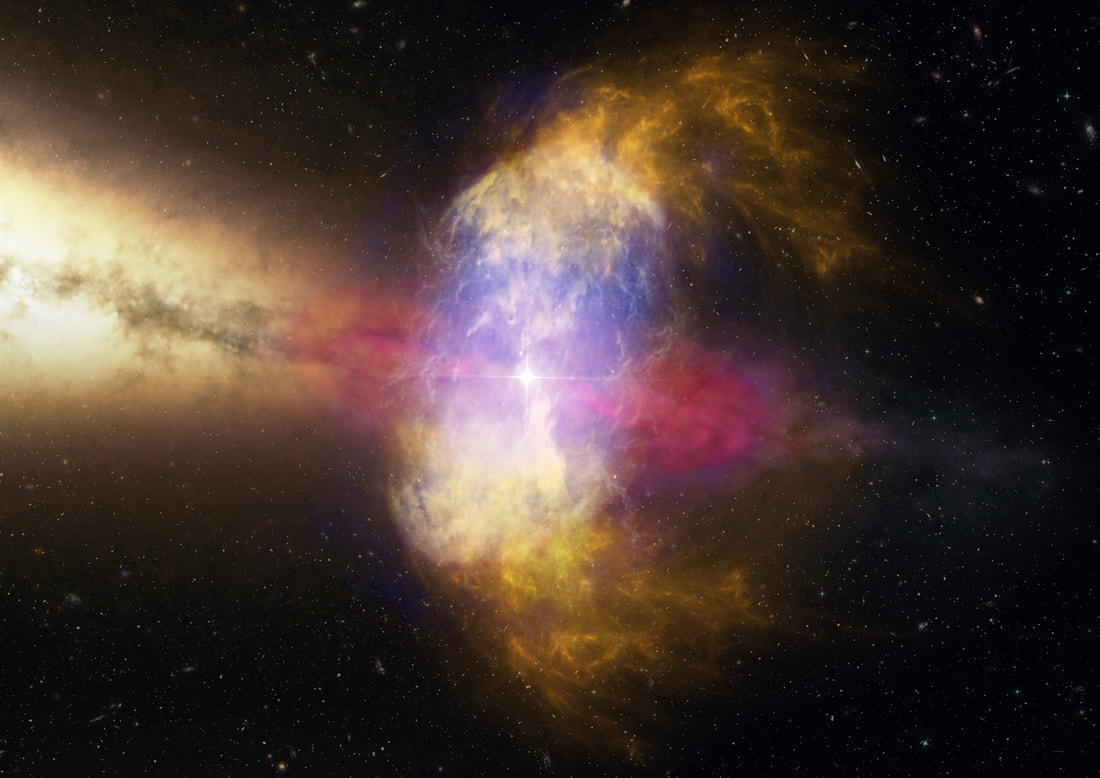

The gravitational-wave Universe is teeming with signals produced by merging black holes and neutron stars. In a new paper released today, an international team of scientists, including Australian OzGrav researchers, present 35 new gravitational-wave observations, bringing the total number of detections to 90! All of these new observations come from the second part of the third observing run, called “O3b”, which lasted from November 2019 to March 2020. Of these 35 new detections, 32 most likely come from pairs of merging black holes and 2 likely come from a neutron star merging with a black hole. The final event could be either a pair of merging black holes or a neutron star and a black hole. The mass of the lighter object in this final event crosses the divide between the expected masses of black holes and neutron stars, and remains a mystery.

Dr Hannah Middleton, postdoctoral researcher at OzGrav, University of Melbourne, and co-author on the study, says “Each new observing run brings new discoveries and surprises. The third observing run saw gravitational wave detection becoming an everyday thing, but I still think each detection is exciting!”

Highlights

Of these 35 new events, here are some notable discoveries (the numbers in the names are the date and time of the observation):

“It’s fascinating that there is such a wide range of properties within this growing collection of black hole and neutron star pairs,” says study co-author and OzGrav PhD student Isobel Romero-Shaw (Monash University). “Properties like the masses and spins of these pairs can tell us how they’re forming, so seeing such a diverse mix raises interesting questions about where they came from.”

Not only can scientists look at the individual properties of these binary pairs, they can also study these cosmic events as a large collection, or population. “By studying these populations of black holes and neutron stars we can start to understand the overall trends and properties of these extreme objects and uncover how these pairs came to be,” says OzGrav PhD student Shanika Galaudage (Monash University). She was a co-author on a companion publication released today: ‘The population of merging compact binaries inferred using gravitational waves through GWTC-3 P2100239’. In this work, scientists analysed the distributions of mass and spin and looked for features that relate to how and where these extreme object pairs form. Shanika adds, “There are features we are seeing in these distributions which we cannot explain yet, opening up exciting research questions to be explored in the future.”

Detecting gravitational waves: a complicated global effort

Detecting and analysing gravitational-wave signals is a complicated task requiring global efforts. Initial public alerts for possible detections are typically released within a few minutes of the observation. Rapid public alerts are an important way of sharing information with the wider astronomy community, so that telescopes and electromagnetic observatories can search for light from merging events. For example, merging neutron stars can produce detectable light.
Says Dr Aaron Jones, co-author and postdoctoral researcher from The University of Western Australia, “It’s exciting to see 18 of those initial public alerts upgraded to confident gravitational wave events, along with 17 new events.” After thorough and careful data analysis, scientists then scrutinise the shortlist of gravitational-wave detections, delving into the properties of the systems that produced these signals. They use parameter estimation, a statistical technique, to learn about the black holes and neutron stars. This includes their masses and spins, their location on the sky and their distance from the Earth.

Future detections

All of these detections were made possible by the global coordinated efforts of the LIGO (USA), Virgo (Italy) and KAGRA (Japan) gravitational-wave observatories. Between observing runs, the detectors have been continually enhanced in small steps, which improves their overall sensitivity. Says Disha Kapasi, OzGrav student (Australian National University), “Upgrades to the detectors, in particular squeezing and the laser power, have allowed us to detect more binary merger events per year, including the first ever neutron star-black hole binary recorded in the GWTC-3 catalogue. This aids in understanding the dynamics and physics of the immediate universe, and in this exciting era of gravitational wave astronomy, we are constantly testing and prototyping technologies that will help us make the instruments more sensitive.” The LIGO and Virgo observatories are currently offline for improvements before the upcoming fourth observing run (O4), due to begin in August 2022 or later. The KAGRA observatory will also join O4 for the full run. More detectors in the network help scientists to better localise the sources of the gravitational waves.
“As we continue to observe more gravitational-wave signals, we will learn more and more about the objects that produce them, their properties as a population, and continue to put Einstein’s theory of General Relativity to the test,” says Dr Middleton. There is a lot to look forward to from gravitational-wave astronomy in O4 and beyond. In the meantime, scientists will continue to analyse and learn from the data. They will search for undiscovered types of gravitational waves, including continuous gravitational waves, and of course new surprises!

Our Universe shines bright with light across the electromagnetic spectrum. Most of this light comes from stars like our Sun in galaxies like our own, but we are often treated to brief, bright flashes that outshine entire galaxies. Some of the brightest flashes are believed to be produced in cataclysmic events, such as the death of massive stars or the collision of two stellar corpses known as neutron stars. Researchers have long studied these bright flashes, or ‘transients’, to gain insight into the deaths and afterlives of stars and the evolution of our Universe. Astronomers are sometimes greeted with transients that defy expectations and puzzle theorists who have long predicted how various transients should look. In October 2014, a long-term monitoring programme of the southern sky with the Chandra telescope, NASA’s flagship X-ray telescope, detected one such enigmatic transient, called CDF-S XT1. It was a bright transient lasting a few thousand seconds. The amount of energy CDF-S XT1 released in X-rays was comparable to the amount of energy the Sun emits over a billion years. Ever since the original discovery, astrophysicists have come up with many hypotheses to explain this transient; however, none have been conclusive.
In a recent study, a team of astrophysicists led by OzGrav postdoctoral fellow Dr Nikhil Sarin (Monash University) found that the observations of CDF-S XT1 match predictions of the radiation expected from a high-speed jet travelling close to the speed of light. Such “outflows” can only be produced in extreme astrophysical conditions, such as the disruption of a star as it is torn apart by a massive black hole, the collapse of a massive star, or the collision of two neutron stars. Sarin et al.’s study found that the outflow from CDF-S XT1 was likely produced by two neutron stars merging together. This makes CDF-S XT1 similar to the momentous 2017 discovery GW170817, the first observation of gravitational waves (cosmic ripples in the fabric of space and time) from two merging neutron stars, although CDF-S XT1 is 450 times further away from Earth. This huge distance means that the merger happened very early in the history of the Universe; it may also be one of the most distant neutron star mergers ever observed. Neutron star collisions are believed to be the main sites in the Universe where heavy elements such as gold, silver, and plutonium are created. Since CDF-S XT1 occurred early in the history of the Universe, this discovery advances our understanding of the origin of the chemical elements found on Earth.

Recent observations of another transient, AT2020blt, in January 2020 (primarily with the Zwicky Transient Facility) have puzzled astronomers. This transient’s light resembles the radiation from high-speed outflows launched during the collapse of a massive star. Such outflows typically produce higher-energy gamma-rays; however, none were observed. The gamma-rays can only be missing for one of three reasons:
1) The gamma-rays were not produced.
2) The gamma-rays were directed away from Earth.
3) The gamma-rays were too weak to be seen.
In a separate study, again led by OzGrav researcher Dr Sarin, the Monash University astrophysicists teamed up with researchers in Alabama, Louisiana, Portsmouth and Leicester to show that AT2020blt probably did produce gamma-rays pointed towards Earth; they were just too weak to be detected by our current instruments. Dr Sarin says: “Together with other similar transient observations, this interpretation means that we are now starting to understand the enigmatic problem of how gamma-rays are produced in cataclysmic explosions throughout the Universe”. The class of bright transients collectively known as gamma-ray bursts, including CDF-S XT1, AT2020blt, and AT2021any, produce enough energy to outshine entire galaxies in just one second. “Despite this, the precise mechanism that produces the high-energy radiation we detect from the other side of the Universe is not known,” explains Dr Sarin. “These two studies have explored some of the most extreme gamma-ray bursts ever detected. With further research, we’ll finally be able to answer the question we’ve pondered for decades: How do gamma ray bursts work?”

Schematic representation of binary neutron star merger outcomes. Panels A and B: Two neutron stars merge as the emission of gravitational waves drives them towards one another. C: If the remnant’s mass is above a certain threshold, it immediately forms a black hole. D: Alternatively, it forms a quasi-stable ‘hypermassive’ neutron star. E: As the hypermassive star spins down and cools, it can no longer support itself against gravitational collapse and collapses into a black hole. F, G: If the remnant’s mass is sufficiently low, it survives for longer as a ‘supramassive’ neutron star, supported against gravity by its rotation, and collapses into a black hole once it loses this support. H: If the remnant is born with a small enough mass, it survives indefinitely as a neutron star. Schematic from Sarin & Lasky 2021.
Image credit: Carl Knox (Swinburne University).

On 17th August 2017, LIGO detected gravitational waves from the merger of two neutron stars. This merger radiated energy across the electromagnetic spectrum, light that we can still observe today. Neutron stars are incredibly dense objects, with masses larger than our Sun’s confined to the size of a small city. These extreme conditions lead some to consider neutron stars the caviar of astrophysical objects, enabling researchers to study gravity and matter in conditions unlike anywhere else in the Universe. The momentous 2017 discovery connected several pieces of the puzzle of what happens during and after the merger. However, one piece remains elusive: what is left behind after the merger? In a recent article published in General Relativity and Gravitation, Nikhil Sarin and Paul Lasky, two OzGrav researchers from Monash University, review our understanding of the aftermath of binary neutron star mergers. In particular, they examine the different outcomes and their observational signatures. The fate of a remnant is dictated by the mass of the two merging neutron stars and the maximum mass a neutron star can support before it collapses to form a black hole. This mass threshold is currently unknown and depends on how nuclear matter behaves in these extreme conditions. If the remnant’s mass is smaller than this threshold, the remnant is a neutron star that will live indefinitely, producing electromagnetic and gravitational-wave radiation. However, if the remnant is more massive than the threshold, there are two possibilities: if its mass is up to 20% above the threshold, it survives as a neutron star for hundreds to thousands of seconds before collapsing into a black hole; heavier remnants survive less than a second before collapsing to form black holes.
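The decision rule described above can be written in a few lines. This is a schematic sketch only: `remnant_fate` is a hypothetical helper, the 20% band is the approximate figure quoted in the text, and the maximum mass `m_max` must be supplied because its true value is unknown (a likely value of about 2.3 solar masses is discussed below). Note that the remnant mass is not simply the sum of the two neutron star masses, since some mass is ejected or radiated away during the merger.

```python
def remnant_fate(m_remnant, m_max):
    """Classify a binary neutron star merger remnant (masses in solar masses).

    m_max is the (unknown) maximum neutron star mass; the 20% band above it
    is the approximate range quoted in the text.
    """
    if m_remnant <= 0 or m_max <= 0:
        raise ValueError("masses must be positive")
    if m_remnant <= m_max:
        return "stable neutron star"
    if m_remnant <= 1.2 * m_max:
        return "neutron star collapsing to a black hole after ~100-1000 s"
    return "black hole within ~1 s"


# Assumed threshold of 2.3 solar masses.
for mass in (2.0, 2.6, 3.0):
    print(mass, "->", remnant_fate(mass, m_max=2.3))
```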
Observations of other neutron stars in our Galaxy and several constraints on the behaviour of nuclear matter suggest that the maximum mass a neutron star can have without collapsing into a black hole is likely around 2.3 times the mass of our Sun. If correct, this threshold implies that many binary neutron star mergers go on to form massive neutron star remnants that survive for at least some time. Understanding how these objects behave and evolve will provide a myriad of insights into the behaviour of nuclear matter and the afterlives of stars more massive than our Sun. Written by PhD student Nikhil Sarin, University of Adelaide

Illustration of binary neutron stars - Carl Knox, OzGrav-Swinburne University

Binary neutron stars have been detected in the Milky Way as millisecond pulsars, and twice outside the Galaxy via gravitational-wave emission. Most of them have orbital periods of less than a day, in stark contrast to their progenitors: massive stellar binaries with orbital periods of hundreds or thousands of days. Over the last several decades, there has been much debate about how massive binaries transition to double compact objects. To date, one of the strongest contenders to explain this transition is a highly complex stage of binary stellar evolution known as the common-envelope phase. The common-envelope phase is a particular outcome of a mass-transfer episode. It begins with Roche-lobe overflow from (at least) one of the stars and is triggered by a dynamical instability. In a simple picture, the stellar envelope of the mass-transferring star (the donor) bloats and engulfs the whole binary, creating a new system comprising an inner compact binary and a shared “common” envelope.
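Roche-lobe overflow starts when the donor’s radius exceeds its Roche lobe. A common way to estimate the Roche-lobe radius is Eggleton’s (1983) fitting formula, combined with Kepler’s third law to turn an orbital period into a separation. The sketch below illustrates this under assumed inputs (a hypothetical 12 solar-mass donor with a 1.4 solar-mass neutron star on a 1000-day orbit); it is not taken from the study described here.

```python
import math

G = 6.674e-8        # gravitational constant, cgs
M_SUN = 1.989e33    # g
R_SUN = 6.957e10    # cm
DAY = 86400.0       # s


def separation_cm(m1_sun, m2_sun, period_days):
    """Orbital separation from Kepler's third law: a^3 = G (m1 + m2) P^2 / (4 pi^2)."""
    m_tot = (m1_sun + m2_sun) * M_SUN
    p = period_days * DAY
    return (G * m_tot * p**2 / (4.0 * math.pi**2)) ** (1.0 / 3.0)


def roche_lobe_fraction(q):
    """Eggleton (1983) approximation to R_L / a for mass ratio q = m_donor / m_companion."""
    q13 = q ** (1.0 / 3.0)
    q23 = q13 * q13
    return 0.49 * q23 / (0.6 * q23 + math.log(1.0 + q13))


m_donor, m_ns, period = 12.0, 1.4, 1000.0   # hypothetical system
a = separation_cm(m_donor, m_ns, period)
r_lobe = roche_lobe_fraction(m_donor / m_ns) * a
print(f"a = {a / R_SUN:.0f} R_sun, donor Roche lobe = {r_lobe / R_SUN:.0f} R_sun")
```

With these assumed numbers the donor’s Roche lobe is several hundred solar radii, so only a strongly expanded giant fills it; for the binary to end up with an orbital period of less than a day, the orbit must shrink enormously, which is the role attributed to the common-envelope phase.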
The interaction of the inner binary with the common envelope results in drag, and the dissipated gravitational energy is transferred to the common envelope, which can lead to its ejection. A successful ejection suggests that a compact binary can form. But what does a “successful ejection” mean? Rather than exploring the common-envelope phase with three-dimensional hydrodynamical models, we attempted to address its likely outcomes by considering the response of a one-dimensional stellar model to envelope removal. In a recent study, we focussed on the common-envelope scenario of a donor star with a neutron star companion. We emulated the common-envelope phase by removing the envelope of the donor star, either partially or completely. After the star was stripped, we followed the evolution of its radius. The most extreme scenarios turned out as expected: if you remove all of the envelope, the stripped star remains compact; if you leave most of the envelope, the stripped star subsequently expands a lot. The question is: what happens between these extremes? Our research shows that when most of the envelope, but not all of it, is removed, the star experiences a short phase of marginal contraction (<100 years), but overall remains compact for the next 1000 years. This suggests that a star doesn’t need to be stripped all the way to the core to avoid an imminent stellar merger. Moreover, the energy needed to partially strip the envelope is less than that needed to fully remove it. Finally, it’s reassuring that our results show a strong correlation to variations in donor mass and composition. This research is a step forward in understanding the common-envelope phase and the formation of double neutron star binaries. Our results imply that a star can be stripped without experiencing Roche-lobe overflow immediately after the common envelope, a likely condition for a successful envelope ejection.
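Why is partial stripping cheaper? The gravitational binding energy of the material above a mass coordinate m is the integral of G m / r over that material, and this integrand is largest deep inside the star, where r is small. The sketch below evaluates that integral for a made-up toy profile (radius growing as the cube of the envelope mass fraction; every number is hypothetical and not from the study’s stellar models), and it ignores the envelope’s internal energy.

```python
import numpy as np

G = 6.674e-8      # cgs
M_SUN = 1.989e33  # g
R_SUN = 6.957e10  # cm


def toy_profile(m_total=12.0, m_core=4.0, r_core=0.5, r_star=500.0, p=3.0, n=20001):
    """Hypothetical envelope profile r(m) (inputs in M_sun and R_sun, outputs in cgs)."""
    m = np.linspace(m_core, m_total, n)
    x = (m - m_core) / (m_total - m_core)   # envelope mass fraction, 0 -> 1
    r = r_core + (r_star - r_core) * x**p
    return m * M_SUN, r * R_SUN


def binding_energy_above(m, r, m_cut):
    """Gravitational binding energy (erg) of all material with mass coordinate >= m_cut."""
    keep = m >= m_cut
    integrand = G * m[keep] / r[keep]
    dm = np.diff(m[keep])
    return np.sum(0.5 * (integrand[1:] + integrand[:-1]) * dm)  # trapezoidal rule


m, r = toy_profile()
e_full = binding_energy_above(m, r, m[0])                    # strip the whole envelope
e_partial = binding_energy_above(m, r, (4.0 + 0.8) * M_SUN)  # leave 0.8 M_sun behind
print(f"full: {e_full:.2e} erg, partial: {e_partial:.2e} erg ({e_partial / e_full:.0%})")
```

Because the innermost, most tightly bound layers dominate the integral, leaving even a small amount of envelope behind removes a disproportionate share of the energy cost in this toy model.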
Our research also suggests that stripped stars retain a few solar masses of peculiar, hydrogen-poor material at their surface. While this amount of hydrogen is not excessive, it might be observable in the star’s spectrum and can play a role at the end of its life, when it explodes as a supernova. While a full understanding of the common-envelope phase remains elusive, we are connecting the dots of the evolution and fate of systems that have experienced a common-envelope event. Written by OzGrav researcher Alejandro Vigna-Gómez from the Niels Bohr Institute (University of Copenhagen)

Short gamma-ray bursts are extremely bright bursts of high-energy light that last for a couple of seconds. Many of these bursts are followed by a mysterious, prolonged ‘afterglow’ of radiation, including X-rays. Despite the efforts of many scientists over many years, we still don’t know where this afterglow comes from. In our recently accepted paper, we investigated a simple model that proposes a rotating neutron star (an extremely dense, collapsed stellar remnant) as the engine behind a type of long-lasting X-ray afterglow known as an X-ray plateau. Using a sample of six short gamma-ray bursts with an X-ray plateau, we worked out the properties of the central neutron star and the mysterious remnant surrounding it. The model we used was inspired by young supernova remnants. While the remnants of short gamma-ray bursts and supernovae differ in many ways, the energy injected by a rotating neutron star obeys the same underlying physics. So, if the remnant of a short gamma-ray burst is a neutron star, it should drive an energy outflow similar to that of a supernova remnant. In our study, we borrowed the basic physics from previous short gamma-ray burst models to predict the luminosity and duration of the X-ray plateau.
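A standard back-of-the-envelope estimate for such a plateau treats the neutron star as a rotating magnetic dipole in vacuum: its rotational energy E = I Ω^2 / 2 is radiated at a luminosity L = B^2 R^6 Ω^4 / (6 c^3) (Gaussian units), so the plateau lasts roughly τ = E / L. The sketch below is this generic textbook estimate with assumed fiducial values (radius 10 km, moment of inertia 10^45 g cm^2); it is not the supernova-remnant-inspired model used in the paper.

```python
import math

C = 2.998e10  # speed of light, cm/s


def dipole_spin_down(b_gauss, period_s, radius_cm=1.0e6, inertia_g_cm2=1.0e45):
    """Vacuum-dipole estimate of rotational energy (erg), spin-down luminosity (erg/s)
    and spin-down timescale (s) for a neutron star with surface field b_gauss."""
    omega = 2.0 * math.pi / period_s
    e_rot = 0.5 * inertia_g_cm2 * omega**2
    luminosity = b_gauss**2 * radius_cm**6 * omega**4 / (6.0 * C**3)
    return e_rot, luminosity, e_rot / luminosity


# Assumed millisecond magnetar: B = 1e15 G, P = 1 ms.
e_rot, lum, tau = dipole_spin_down(1.0e15, 1.0e-3)
print(f"E_rot = {e_rot:.1e} erg, L = {lum:.1e} erg/s, tau = {tau:.0f} s")
```

The timescale scales as 1/B^2, so a magnetar-strength field drains a millisecond spin within about half an hour in this example, whereas fields several orders of magnitude weaker let millisecond pulsars keep spinning for millions of years or longer.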
For each short gamma-ray burst, the results suggest that the remnant neutron star is a millisecond magnetar: a rapidly spinning neutron star with an extraordinarily powerful magnetic field. All known magnetars rotate very slowly; similarly, all observed neutron stars with millisecond spins have weak magnetic fields. This gap in observations isn’t surprising: a strong magnetic field rapidly converts the star’s rotational energy into electromagnetic energy, so a magnetar cannot keep spinning at millisecond periods for long. For a magnetar-strength field, this process happens on timescales from seconds to days, exactly the duration of most X-ray plateaux. This paper is the first attempt at estimating the source of X-ray afterglows using this kind of model. As the model matures and further data are collected, we’ll be able to draw stronger conclusions about the source of X-ray plateaux and, if we’re lucky, discover what these mysterious remnants are. Written by OzGrav PhD student Lucy Strang, The University of Melbourne

Link to research paper: https://arxiv.org/pdf/2107.13787.pdf Paper status: Accepted 27/07/21, publication TBC.

In the 2030s, gravitational-wave detectors will be thousands of times more sensitive than Advanced LIGO, Virgo, and KAGRA. The network of “third generation” (3G) observatories will almost certainly include Cosmic Explorer (US) and Einstein Telescope (EU), and may include a Southern-hemisphere observatory similar to Cosmic Explorer. These amazing instruments will see every binary neutron star merger in the Universe, and most binary black holes out to redshifts beyond 10: hundreds of thousands, possibly millions, of resolvable signals per year. Many of these signals will be extremely loud, with signal-to-noise ratios in the thousands, facilitating breakthroughs in fundamental physics and cosmology. And herein lies a challenge! How do we extract all the information from these signals?
On the surface it seems like a straightforward task: just keep running parameter estimation as we already do! But it turns out that our current parameter estimation methods don’t scale well when signals are very loud and remain in the detectors’ sensitive frequency band for a very long time.

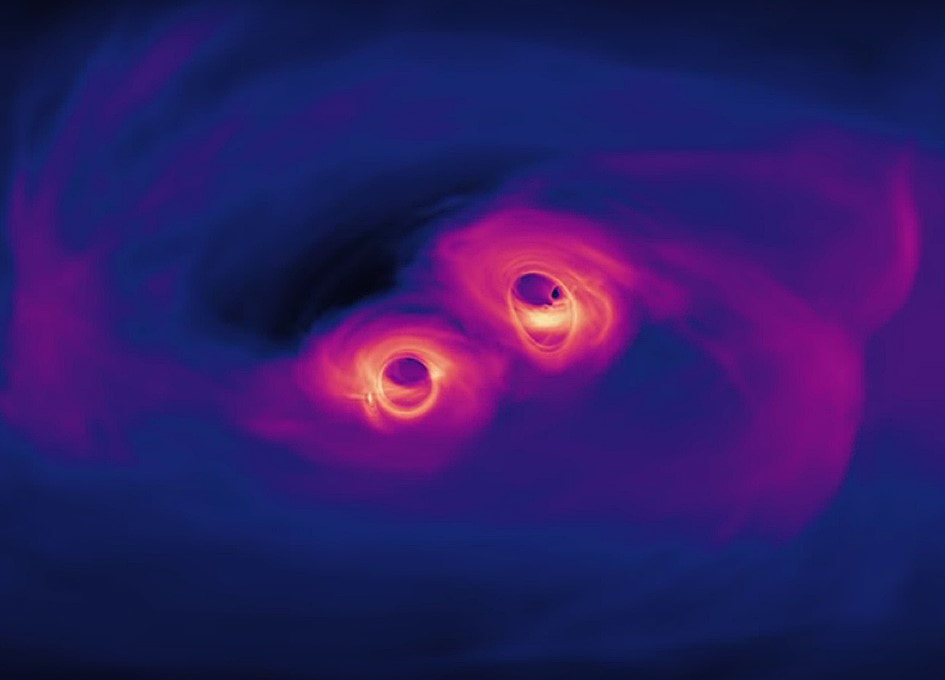

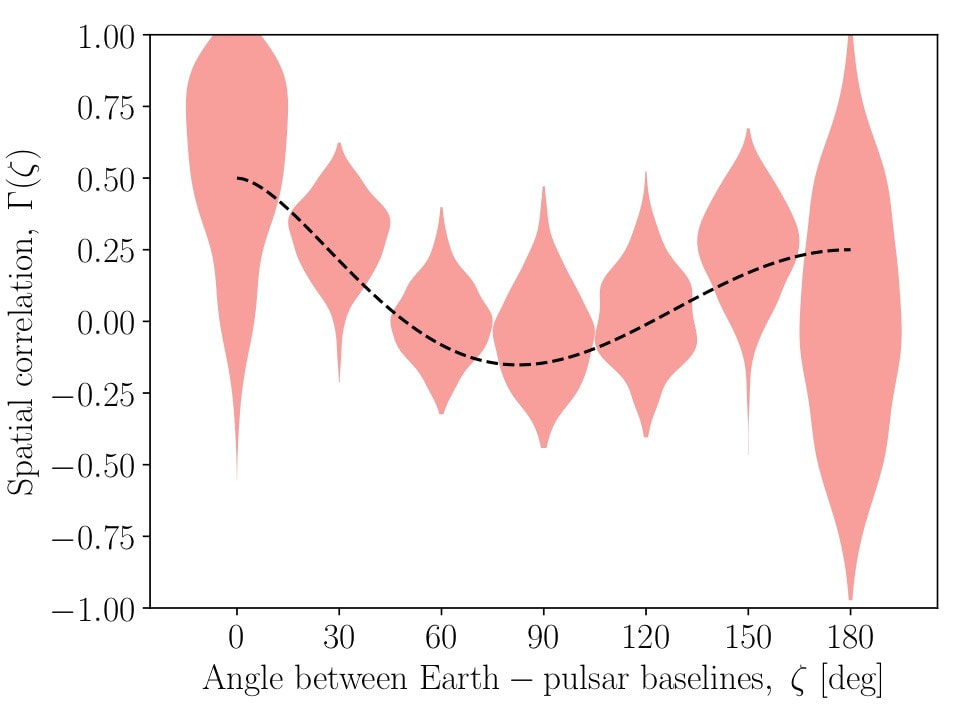

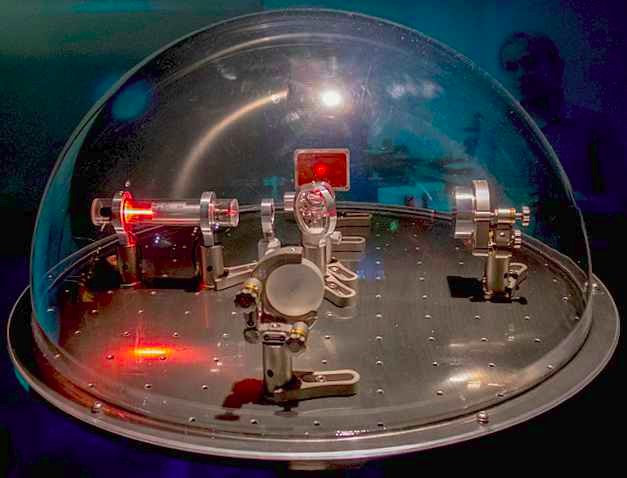

To see why, we imagined a binary neutron star merger signal, “GW370817”, which originated about 40 Mpc from Earth, roughly the distance of GW170817 (assuming 3G detectors are online in 2037, we’re guaranteed to observe a thousand or so binary neutron star mergers on August 17th, 2037!). A network of 3G detectors would observe GW370817 for 90 minutes, with a staggering signal-to-noise ratio of 2500. Analysing this signal is around a thousand times more computationally expensive than analysing a signal in today’s detectors; by our back-of-the-envelope estimates, it would take around 1000 years! This prohibitive analysis time is a hurdle to astrophysics with 3G data, and it’s the problem we solve in our paper. To drive down the computation time, we developed “reduced order models” of gravitational-wave signals, which allow us to infer binary neutron star properties using heavily compressed data with almost no loss in accuracy. We reduced the computational cost of inference on 3G data by a factor of 13,000. Together with a pinch of parallel computing, we’re able to perform the analysis in a few hours. This is good news for astrophysics in the 3G era. While the 2030s and 3G detectors are a few years away, our results and methods are useful for a wide range of theoretical and design studies, which are ramping up in lockstep with the development of the detector technology. For those old enough to remember, the first LISA mock-data challenges began in 2005, which gives a sense of how much exploratory work takes place before a detector is operational. For the time being, there are plenty of interesting astrophysics questions we can start to think about in the context of 3G detectors: how well will we be able to measure the neutron star equation of state and the maximum mass of neutron stars? And what will this tell us about extreme matter? How well can neutron star spins be measured, and can this tell us anything about supernova mechanisms?
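The idea behind this kind of compression can be illustrated with a generic reduced-order-quadrature sketch. Likelihood evaluations are dominated by inner products ⟨d, h⟩ = Δf Σ d*(f) h(f) over every frequency bin. If all templates of interest lie close to a small reduced basis, each template can be interpolated from its values at a handful of “empirical interpolation” nodes, and the data can be folded into a short vector of weights once, before sampling. The toy waveform, frequency grid and tolerances below are hypothetical choices for illustration; they are not the waveform models or basis sizes used in the paper.

```python
import numpy as np


def toy_waveform(f, a):
    """Hypothetical frequency-domain chirp: amplitude f^(-7/6), phase a * f^(-5/3)."""
    return f ** (-7.0 / 6.0) * np.exp(-1j * a * f ** (-5.0 / 3.0))


def reduced_basis(training, rtol=1e-8):
    """Orthonormal reduced basis (rows) from the SVD of a training set of waveforms."""
    _, s, vh = np.linalg.svd(training, full_matrices=False)
    m = int(np.sum(s / s[0] > rtol))
    return vh[:m]


def eim_nodes(basis):
    """Greedy empirical-interpolation node selection for the given basis."""
    nodes = [int(np.argmax(np.abs(basis[0])))]
    for j in range(1, basis.shape[0]):
        v = basis[:j, nodes].T                    # first j basis vectors at current nodes
        c = np.linalg.solve(v, basis[j, nodes])   # interpolate basis vector j
        residual = basis[j] - c @ basis[:j]
        nodes.append(int(np.argmax(np.abs(residual))))
    return np.array(nodes)


def roq_weights(data, basis, nodes, df):
    """Weights w such that <d, h> ~= sum_k w[k] * h(f[nodes[k]]) for h in the basis span."""
    v = basis[:, nodes].T                         # (m, m) interpolation matrix
    interp = np.linalg.solve(v.T, basis).T        # (N, m): h ~= interp @ h[nodes]
    return df * (np.conj(data) @ interp)


f = np.linspace(20.0, 512.0, 2000)
df = f[1] - f[0]
training = np.array([toy_waveform(f, a) for a in np.linspace(1000.0, 2000.0, 400)])
basis = reduced_basis(training)
nodes = eim_nodes(basis)

data = toy_waveform(f, 1437.7)                   # noise-free toy "data"
weights = roq_weights(data, basis, nodes, df)    # computed once, before sampling

h = toy_waveform(f, 1502.9)                      # a template not in the training set
full = df * np.vdot(data, h)                     # sum over all 2000 frequency bins
fast = weights @ h[nodes]                        # sum over len(nodes) bins only
print(f"{len(f)} bins -> {len(nodes)} nodes, relative error {abs(fast - full) / abs(full):.1e}")
```

Once the weights are precomputed, each likelihood evaluation costs on the order of the number of nodes rather than the number of frequency bins, which is one reason this style of compression pays off most for long-duration signals.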
Our results and methods will facilitate this kind of theoretical work by enabling us to perform robust inference on binary neutron star properties in mock 3G data. Link to research paper: https://arxiv.org/abs/2103.12274 Written by Rory Smith, Monash University

Galaxies host supermassive black holes, which weigh millions to billions of times more than our Sun. When galaxies collide, the pairs of supermassive black holes at their centres are also set on a collision course. It may take millions of years before the two black holes slam into each other. When the distance between them is small enough, the black hole binary starts to produce ripples in space-time, called gravitational waves. Gravitational waves were first observed in 2015, but from much smaller black holes, weighing tens of times as much as our Sun. Gravitational waves from supermassive black holes are still a mystery to scientists. Their discovery would be invaluable for figuring out how galaxies and stars form and evolve, and for finding the origin of dark matter. A recent study led by Dr Boris Goncharov and Prof Ryan Shannon, both researchers from the ARC Centre of Excellence for Gravitational Wave Discovery (OzGrav), has tried to solve this puzzle. Using the most recent data from the Australian experiment known as the Parkes Pulsar Timing Array, the team searched for these elusive gravitational waves from supermassive black holes. The experiment observed radio pulsars: extremely dense collapsed cores of massive supergiant stars (called neutron stars) that pulse out radio waves, like a lighthouse beam. The timing of these pulses is extremely precise, while a background of gravitational waves would advance and delay pulse arrival times in a predicted pattern across the sky, by around the same amount in all pulsars.
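The “predicted pattern across the sky” is usually quantified by the Hellings–Downs curve: for an isotropic gravitational-wave background, the expected correlation between the timing deviations of two pulsars depends only on their angular separation ζ. The sketch below evaluates the standard form Γ(ζ) = 1/2 − x/4 + (3/2) x ln x, with x = (1 − cos ζ)/2, normalised so that Γ tends to 1/2 for two distinct pulsars at zero separation. It shows the expected shape only and is not an analysis of Parkes Pulsar Timing Array data.

```python
import numpy as np


def hellings_downs(zeta_rad):
    """Expected correlation between two distinct pulsars separated by angle zeta (radians)."""
    x = np.atleast_1d((1.0 - np.cos(np.asarray(zeta_rad, dtype=float))) / 2.0)
    xlogx = np.zeros_like(x)
    positive = x > 0
    xlogx[positive] = x[positive] * np.log(x[positive])   # x ln x -> 0 as x -> 0
    return 0.5 - x / 4.0 + 1.5 * xlogx


angles_deg = np.linspace(0.0, 180.0, 1801)
gamma = hellings_downs(np.radians(angles_deg))
i_min = np.argmin(gamma)
print(f"Gamma(0) = {gamma[0]:.3f}, Gamma(180) = {gamma[-1]:.3f}, "
      f"minimum {gamma[i_min]:.3f} at {angles_deg[i_min]:.1f} deg")
```

Pulsar pairs about 80 degrees apart are expected to be slightly anti-correlated, which is why detecting this specific angular shape, rather than a common drift alone, is treated as the decisive evidence.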
The researchers found that the arrival times of these radio pulses do show deviations with the properties we expect from gravitational waves. However, more data is needed to conclude whether the arrival-time deviations are correlated between all pulsars across the sky, which is considered the “smoking gun”. Similar results have also been obtained by collaborations based in North America and Europe. These collaborations, along with groups based in India, China, and South Africa, are actively combining datasets under the International Pulsar Timing Array to improve the sky coverage. This discovery is considered a precursor to the detection of gravitational waves from supermassive black holes. However, Dr Goncharov and colleagues pointed out that the observed variations in the radio pulse arrival times might also be due to pulsar-intrinsic noise. Dr Goncharov said: “To find out if the observed "common" drift has a gravitational wave origin, or if the gravitational-wave signal is deeper in the noise, we must continue working with new data from a growing number of pulsar timing arrays across the world”.

Gravitational wave scientists have designed and built an interactive science exhibit, modelled on a real-life gravitational-wave detector, to explain gravitational-wave science. It was developed by an international team that includes researchers now at the ARC Centre of Excellence for Gravitational Wave Discovery (OzGrav). The research paper describing it has recently been published in the American Journal of Physics, and the exhibit, a Michelson interferometer, is on long-term display at the Thinktank Birmingham Science Museum in the UK. The project has a lasting international impact, with online instructions and parts lists available for others to construct their own versions of the exhibit. Observations of gravitational waves (ripples in the fabric of space and time) have sparked increased public interest in this area of research.
The effect of a passing gravitational wave is to stretch and squash the distances between objects. Real-life observatories are large, complex devices based on the Michelson interferometer that use laser light to search for passing gravitational waves.
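For an idealised Michelson interferometer with equal beam powers and perfect contrast, the intensity at the output port is I = I0 cos^2(2π ΔL / λ), where ΔL is the difference in arm lengths: light travels each arm twice, so changing one arm by just a quarter of a wavelength flips the output from bright to dark. The sketch below evaluates this relation; the 532 nm wavelength is an assumed value, not a property of the exhibit.

```python
import math


def output_intensity(delta_l_m, wavelength_m, i0=1.0):
    """Ideal Michelson output intensity for an arm-length difference delta_l_m (metres)."""
    return i0 * math.cos(2.0 * math.pi * delta_l_m / wavelength_m) ** 2


WAVELENGTH = 532e-9  # assumed green laser, metres
for fraction in (0.0, 0.125, 0.25, 0.5):
    d = fraction * WAVELENGTH
    print(f"delta L = {d * 1e9:6.1f} nm -> I/I0 = {output_intensity(d, WAVELENGTH):.2f}")
```

This sensitivity to sub-wavelength changes is why even footsteps in the room visibly shift the exhibit’s interference pattern.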

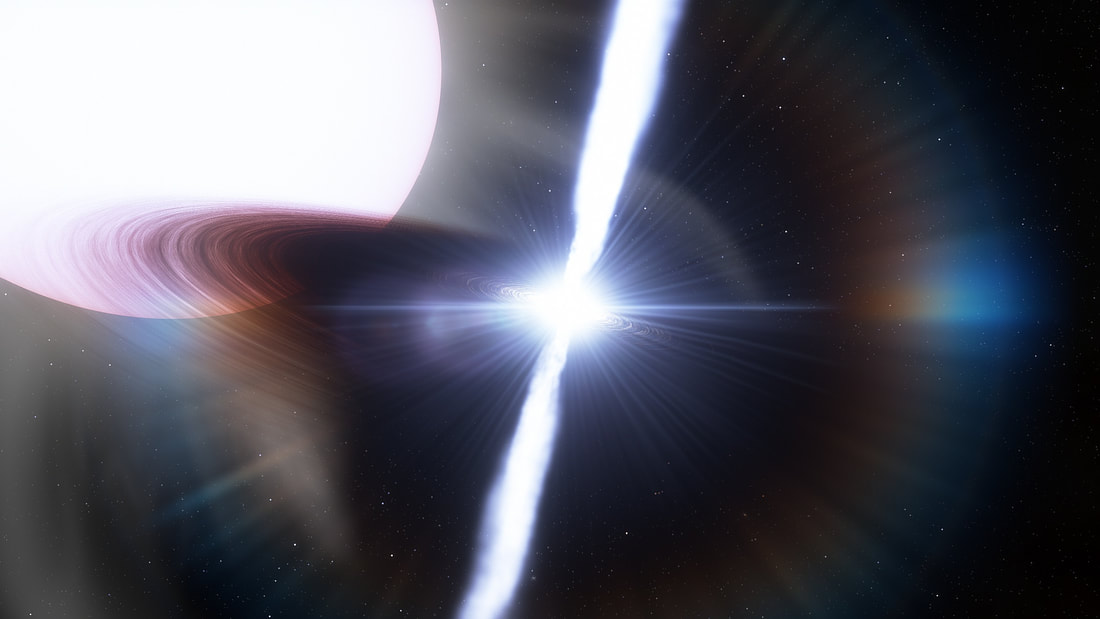

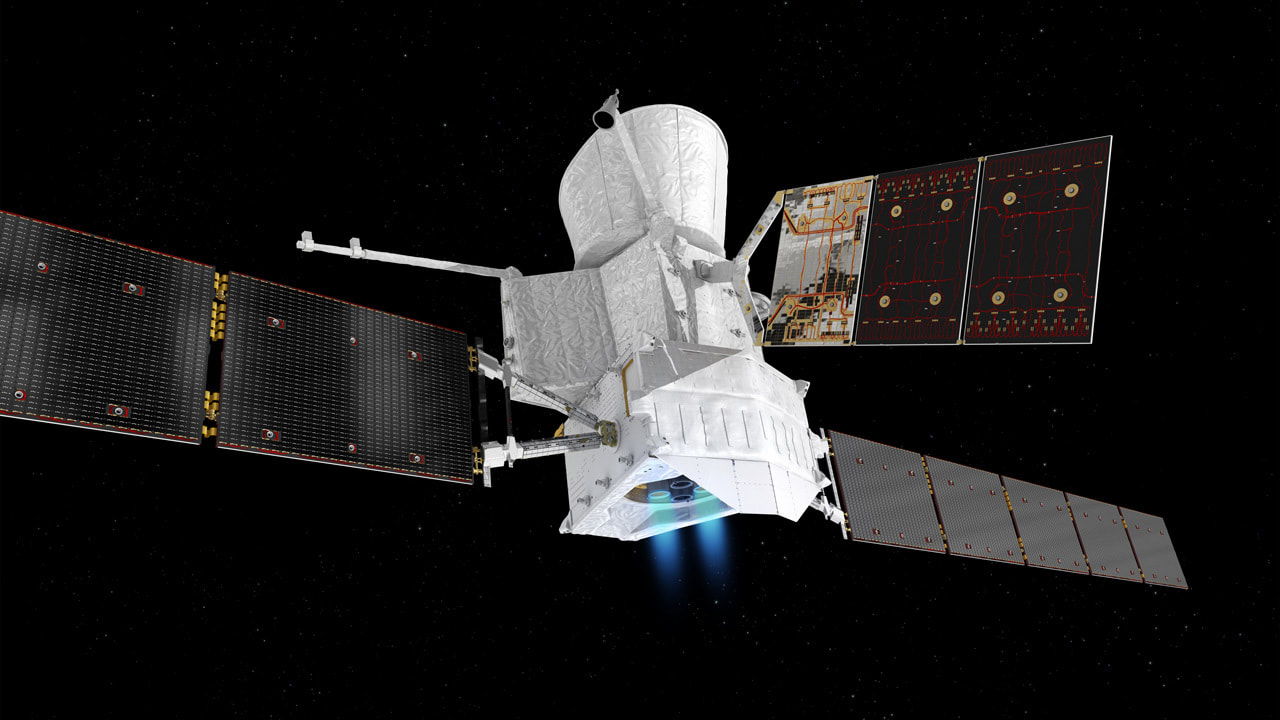

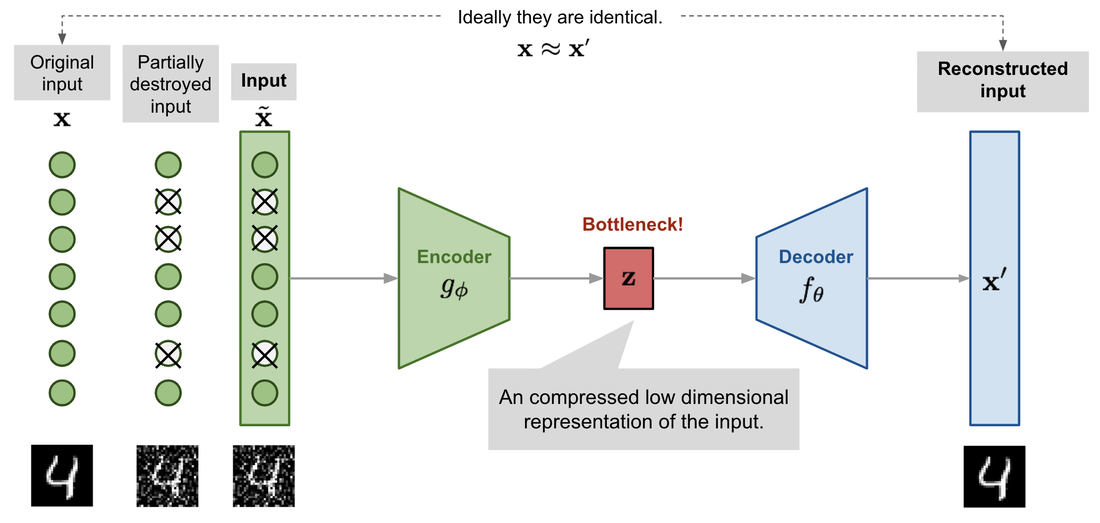

In a Michelson interferometer, laser light is split into two perpendicular beams by a beam-splitter; the beams travel down the detector arms, reflect off mirrors and return to the beam-splitter, where they recombine and produce an interference pattern. If the relative length of the arms changes, the interference pattern changes. The exhibit cannot detect gravitational waves, but it’s extremely sensitive to vibrations in the room! The Michelson interferometer exhibit has an attractive high-shine design, using lab-grade optics and custom-made components, drawing people in to take a closer look. A list of all the parts used in the intricate design is available on the official website; the creators are continuing to investigate low-cost designs using laser pointers and building blocks. At science fairs, experts are normally present to explain the items on display; however, this is not the case in a museum. ‘Exhibits need to be easily accessible with self-guided learning,’ explains OzGrav postdoc Dr Hannah Middleton, one of the project leads from the University of Melbourne. ‘We’ve developed custom interactive software for the exhibit through which a user can access explanatory videos, animations, images, text, and a quiz. Users can also directly interact with the interferometer by pressing buttons to input a simulated gravitational wave, and produce a visible change in the interference pattern.’

BepiColombo is a joint mission between the European Space Agency (ESA) and the Japan Aerospace Exploration Agency (JAXA) designed to study the planet Mercury. Launched in late 2018, it follows a complex trajectory that included a fly-by past Earth on April 10, 2020. We took advantage of the event to organise a coordinated observing campaign. The main goal was to compute the observed fly-by orbit properties and compare them with the values available from Mission Control.
The method we designed could then be improved for future observing campaigns targeting natural objects that may collide with our planet. The incoming trajectory of the probe limited ground-based observations to only a few hours around its closest approach to Earth. The network of telescopes we used was developed by ESA’s NEO Coordination Centre (NEOCC) with the capability to quickly observe imminent impactors, which follow similar orbits. Our team successfully acquired the target with various instruments, such as the 6ROADS Chilean telescope, the 1.0 m Zadko telescope in Australia, the ISON network of telescopes, and the 1.2 m Kryoneri telescope in Corinthia, Greece. The observations were difficult due to the object’s extremely fast angular motion: at one point, the telescopes saw the probe move across the sky by twice the apparent size of the Moon each minute. This challenged the tracking capabilities and timing accuracy of the telescopes. Each telescope moved at the predicted instantaneous speed of the target while taking images, "tracking" the spacecraft. Field stars appeared as trails, while BepiColombo itself appeared as a point source, but only if the observation started at exactly the right moment. Because the probe was moving so fast, any error in the timestamps of the telescope images translates into an error in the probe’s position. To reach a precise measurement of 0.1 metres, the image timestamps needed a precision of 100 milliseconds. The final results were condensed into two measurable quantities that could be compared directly with the Mission Control values: the perigee distance and the time of the probe’s closest approach to Earth.
Both numbers matched the Mission Control values, proving our method a success: it calculated a more accurate prediction of BepiColombo’s orbit, and it provided valuable insights for future observations of objects on a collision course with Earth:
• A purely optical observing campaign can provide trajectory information during a fly-by at sub-kilometre and sub-second levels of precision.
• A similar campaign would lead to sub-kilometre and sub-second precision for the time and location of the atmospheric entry of any colliding object.
• Timing accuracy below 100 milliseconds is crucial for the closest observations.
• It’s possible to organise astrometric campaigns with coverage from nearly every continent.

Link to research paper: https://doi.org/10.1016/j.actaastro.2021.04.022 Written by OzGrav researcher Dr Bruce Gendre, University of Western Australia.

From gravitational wave science to global technology company: Liquid Instruments is a Canberra start-up bringing NASA technology to the world. Liquid Instruments (LI) Pty Ltd, a spin-off company from the Australian National University (ANU), is revolutionising the $17b test and measurement market. Test and measurement devices are used by scientists and engineers to measure, generate and process the electronic signals that are fundamental to the photonics, semiconductor, aerospace and automotive industries. The LI team has raised more than $25M USD in venture capital investment, and now has more than 1000 users in 30 countries. LI was founded by researchers from the gravitational wave group at ANU to commercialise advanced instrumentation technology derived from both ground- and space-based gravity detectors. OzGrav Chief Investigator Daniel Shaddock (ANU), CEO of Liquid Instruments, began as an engineer at NASA’s Jet Propulsion Laboratory in 2002, working on the Laser Interferometer Space Antenna (LISA), a joint project between NASA and the European Space Agency.
The work on LISA’s phasemeter was the genesis of Liquid Instruments. LI’s software-enabled hardware employs advanced digital signal processing to replace multiple pieces of conventional equipment at a fraction of the cost and with a drastically improved user experience. Their first product, Moku:Lab, provides the functionality of 12 instruments in one simple integrated unit. On 23 June, the company launched two new hardware devices: the Moku:Go, an engineering lab in a backpack for education, and the Moku:Pro, a multi-GHz device for professional scientists and engineers. Like the Moku:Lab, this revolutionary new hardware includes a suite of instruments with robust hardware features, giving a breakthrough combination of performance and versatility. Daniel Shaddock says: “Moku:Pro takes software defined instrumentation to the next level with more than 10x improvements in many dimensions. Moku:Pro is a new weapon for scientists. Moku:Go takes all the great features of Moku:Lab but reduces the cost by 10x to make it more accessible than ever before. We hope it will help train the next generation of scientists and engineers in universities around the world.” www.liquidinstruments.com

‘Type Ia’ supernovae involve an exploding white dwarf close to its Chandrasekhar mass. For this reason, type Ia supernova explosions have almost universal properties and are an excellent tool for estimating the distance to the explosion, forming a rung of the cosmic distance ladder. Collapsing massive stars form a different kind of supernova (type II) with more variable properties, but with comparable peak luminosities. To date, the most luminous events are core-collapse supernovae in a gaseous environment, where the circumstellar medium near the explosion converts the kinetic energy of the ejecta into radiation and thus increases the luminosity. The circumstellar material usually originates from the massive star’s outer layers, expelled by stellar winds prior to the explosion.
A natural question is: what would type Ia supernovae look like in a dense gaseous environment? What would be the origin of the circumstellar medium in this case? Would they also be more luminous than their standard siblings? To address these questions, OzGrav researchers Evgeni Grishin, Ryosuke Hirai, and Ilya Mandel, together with an international team of scientists, studied explosions in dense accretion discs around the central regions of active galactic nuclei. They constructed an analytical model that yields the peak luminosity and lightcurve for various initial conditions, such as the accretion disc properties, the mass of the supermassive black hole, and the location and internal properties of the explosion (e.g. initial energy, ejecta mass). The study also used suites of state-of-the-art radiation hydrodynamical simulations. The explosion generates a shock wave within the circumstellar medium, which gradually propagates outward. Eventually, the shock wave reaches a shell that is optically thin enough for the photons to ‘break out’. The location of this breakout shell and the duration of the photon diffusion determine the lightcurve properties. If the amount of circumstellar material is much smaller than the ejecta mass, the lightcurves look very similar to those of type Ia supernovae. Conversely, a very massive circumstellar medium can choke the explosion so that it is not seen. The sweet spot lies in between, where the ejecta mass is roughly comparable to the amount of circumstellar material. In this case, the peak luminosity can be 100 times greater than that of standard type Ia supernovae, which makes these explosions among the brightest supernova events to date. The research paper describing this work (Grishin et al., “Supernova explosions in active galactic nuclear discs”) was recently published in Monthly Notices of the Royal Astronomical Society.
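The breakout condition has a simple order-of-magnitude form: photons escape once the optical depth τ of the material ahead of the shock drops to about c/v, where v is the shock speed, and they then need a diffusion time of roughly τ Δ / c to cross a layer of thickness Δ. The sketch below applies this to a uniform-density medium; the opacity, density and shock speed are assumed illustrative values, and this is not the analytical model of Grishin et al.

```python
C = 2.998e10  # speed of light, cm/s


def breakout_depth(kappa, rho, v_shock):
    """Depth (cm) below the outer edge of a uniform medium where tau = kappa*rho*depth = c/v."""
    return C / (v_shock * kappa * rho)


def diffusion_time(kappa, rho, depth):
    """Random-walk diffusion time (s) through a layer of the given depth: t ~ tau * depth / c."""
    tau = kappa * rho * depth
    return tau * depth / C


kappa = 0.34    # electron-scattering opacity, cm^2/g (assumed)
rho = 1.0e-10   # circumstellar density, g/cm^3 (assumed)
v = 1.0e9       # shock speed, cm/s, i.e. 10,000 km/s (assumed)
depth = breakout_depth(kappa, rho, v)
t_diff = diffusion_time(kappa, rho, depth)
print(f"breakout depth = {depth:.1e} cm, diffusion time = {t_diff:.0f} s")
```

At the breakout shell the diffusion time equals the time the shock needs to cross the remaining layer (depth / v): this is the point at which radiation can first outrun the shock.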
These luminous explosions may be observable in accretion discs with low accretion rates, or in galaxies with smaller supermassive black hole masses, where the background active galactic nucleus activity will not hinder observations with advanced instruments. The underlying physical processes of photon diffusion and shock breakout can be creatively explained with poetry:

All of a sudden, the heat is intense.
We must cool down, but the path is opaque.
Every direction around is so dense,
Which one should the photons take?
They have to break out, for God's sake...

At first, they are stuck, no matter the way,
They sway side to side, they randomly walk.
The leader in front leads them astray,
How hogtied is this radiant flock...
But wait, do you also gaze at the shock?

The ominous furnace is starting to snap,
Its violent grip getting frail.
The path is now clear, the direction is "up!"
We're sitting on the shock front's tail.
We're seizing the shock, we'll prevail!

The shock front behind us, but we're still out of place,
We propel with incredible might.
We keep on ascending, increasing the pace,
Any particle is now out of sight,
In this vacuum, we're free from inside,
And can travel as fast as the light.

Written by OzGrav researcher Evgeni Grishin, Monash University

One of the major challenges in gravitational wave data analysis is accurately inferring the properties of the progenitor black hole and neutron star systems from data recorded by LIGO and Virgo. The faint gravitational wave signals are buried in instrumental and terrestrial noise. LIGO and Virgo use data analysis techniques that aim to minimise this noise, with software that can ‘gate’ the data, removing parts of the data corrupted by sharp noise features called ‘glitches’. They also use methods that extract the pure gravitational-wave signal from the noise altogether.
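Gating is commonly implemented by multiplying the data by a window that is zero around the glitch and tapers smoothly back to one, so that sharp edges do not introduce new artefacts. The sketch below builds such an inverse-Tukey-style window with NumPy; the helper name, glitch model and window lengths are hypothetical and are not the production LIGO-Virgo settings.

```python
import numpy as np


def inverse_tukey_gate(times, t_glitch, half_width, taper):
    """Weights that are 0 within half_width of t_glitch, rise to 1 over a cosine taper
    of length taper, and are 1 elsewhere."""
    dt = np.abs(times - t_glitch)
    weights = np.ones_like(times)
    weights[dt <= half_width] = 0.0
    ramp = (dt > half_width) & (dt < half_width + taper)
    weights[ramp] = 0.5 * (1.0 - np.cos(np.pi * (dt[ramp] - half_width) / taper))
    return weights


# Hypothetical data: unit Gaussian noise plus a loud, short sine-Gaussian glitch at t = 2 s.
rng = np.random.default_rng(seed=0)
fs = 1024.0
times = np.arange(0.0, 4.0, 1.0 / fs)
glitch = 50.0 * np.exp(-((times - 2.0) / 0.01) ** 2) * np.sin(2 * np.pi * 200.0 * times)
strain = rng.normal(0.0, 1.0, times.size) + glitch

gate = inverse_tukey_gate(times, t_glitch=2.0, half_width=0.05, taper=0.1)
gated = strain * gate
print(f"max |data| before: {np.abs(strain).max():.1f}, after: {np.abs(gated).max():.1f}")
```

The trade-off is that any real signal inside the gated stretch is lost too, which is part of why methods that separate signal from noise without discarding data are attractive.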
However, these techniques are usually slow and computationally intensive, which is potentially detrimental to multi-messenger astronomy: observing the electromagnetic counterparts of binary neutron star mergers, like short gamma-ray bursts, relies heavily on fast and accurate predictions of the sky direction and masses of the sources. In our recent study, we’ve developed a deep learning model that extracts pure gravitational wave signals from detector data faster than conventional techniques, with accuracy similar to the best of them. Whereas traditional programming follows an explicit set of instructions (code) to perform a task, deep learning algorithms generate predictions by identifying patterns in data. These algorithms are realised by ‘neural networks’, models inspired by the neurons in our brain, which are ‘trained’ so that they can then generate accurate predictions on new data almost instantly. The deep learning architecture we designed, called a ‘denoising autoencoder’, consists of two separate neural networks: the Encoder and the Decoder. The Encoder reduces the size of the noisy input signals and generates a compressed representation encapsulating the essential features of the pure signal. The Decoder ‘learns’ to reconstruct the pure signal from this compressed feature representation. A schematic diagram of a denoising autoencoder model is shown in Figure 1. For the Encoder network, we’ve included a Convolutional Neural Network (CNN), which is widely used for image classification and computer vision tasks because it is efficient at extracting distinctive features from data. For the Decoder network, we used a Long Short-Term Memory (LSTM) network, which learns to make future predictions from past time-series data. Our CNN-LSTM model successfully extracts pure gravitational wave signals from detector data for all ten binary black hole gravitational wave signals detected by LIGO-Virgo during the first and second observing runs.
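The quality of an extracted signal is typically scored by its “match” with a reference template, essentially a normalised overlap that equals 1 for identical shapes and decreases as they differ. The sketch below uses the simplest version: a plain dot product, with none of the noise weighting or maximisation over time and phase shifts that real analyses include. The waveform and residual noise level are hypothetical.

```python
import numpy as np


def match(a, b):
    """Normalised overlap of two real time series (no noise weighting, no time/phase maximisation)."""
    return np.dot(a, b) / np.sqrt(np.dot(a, a) * np.dot(b, b))


fs = 4096
t = np.arange(fs) / fs
# Hypothetical template: a Gaussian-windowed 100 Hz sinusoid.
template = np.exp(-(((t - 0.5) / 0.05) ** 2)) * np.sin(2 * np.pi * 100.0 * t)
# Stand-in for an imperfect reconstruction: the template plus small residual noise.
rng = np.random.default_rng(seed=7)
extracted = template + rng.normal(0.0, 0.02, t.size)
print(f"match = {match(extracted, template):.3f}")
```

Because the overlap is normalised, the match is insensitive to an overall amplitude scaling and measures only how well the shapes agree.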
Our model is the first deep learning-based model to obtain a match of more than 97% between the extracted signals and the ‘ground truth’ signal ‘templates’ for all of these detected events. It is also much faster than current techniques: it can accurately extract a single gravitational wave signal from noise in less than a millisecond (compared to a few seconds for other methods). The data analysis group of OzGrav-UWA is now using our CNN-LSTM model together with other deep learning models to predict important gravitational wave source parameters, like the sky direction and ‘chirp mass’. We’re also working on generalising the model to accurately extract single signals from low-mass black hole binaries and neutron star binaries.

https://arxiv.org/abs/2105.03073

Paper status: Accepted.

Written by OzGrav researcher Chayan Chatterjee, UWA

A newly discovered astronomical phenomenon was revealed in a globally coordinated announcement of not one, but two events witnessed last year: the death spiral and merger of two of the densest objects in the Universe, a neutron star and a black hole. The discovery of these remarkable events, which occurred before the time of the dinosaurs but have only just reached Earth, will allow researchers to further understand the nature of the space-time continuum and the building blocks of matter. The discoveries were made by the Laser Interferometer Gravitational-Wave Observatory (LIGO) in the US and the Virgo gravitational-wave observatory in Italy, with significant involvement from Australian researchers. According to Dr Rory Smith, from the ARC Centre of Excellence for Gravitational-Wave Discovery (OzGrav) and Monash University and the international co-lead on the paper published in the Astrophysical Journal Letters, the discoveries are a milestone for gravitational-wave astronomy. “Witnessing these events opens up new possibilities to study the fundamental nature of space-time, and matter at its most extreme,” Dr Smith said.
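The “match” quoted above measures how closely two waveforms agree in shape, with 1 meaning identical up to an overall scaling, so a match above 0.97 corresponds to the >97% figure. The sketch below is a simplified version under stated assumptions: it treats the noise as white (every sample weighted equally) and maximises only over whole-sample time shifts. Real gravitational-wave match calculations weight the inner product by the detector's noise spectrum and also maximise over phase. The function names are illustrative.

```python
import math


def inner(a, b):
    return sum(x * y for x, y in zip(a, b))


def overlap(a, b):
    """Normalised inner product: 1.0 means the same shape, -1.0 means inverted."""
    return inner(a, b) / math.sqrt(inner(a, a) * inner(b, b))


def match(extracted, template, max_lag):
    """Best overlap over integer time shifts of up to +/- max_lag samples.

    Assumes both series have the same length and white noise.
    """
    best = -1.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            # Compare extracted[lag + k] with template[k].
            a, b = extracted[lag:], template[:len(template) - lag]
        else:
            # Compare extracted[k] with template[k - lag].
            a, b = extracted[:lag], template[-lag:]
        best = max(best, overlap(a, b))
    return best


# Hypothetical example: a wave packet and a copy delayed by 3 samples.
template = [math.sin(0.3 * t) * math.exp(-((t - 50) / 20) ** 2) for t in range(100)]
delayed = [0.0] * 3 + template[:-3]
print(match(delayed, template, max_lag=5))
```

Without the time-shift search the delayed copy would overlap poorly with the template; allowing shifts recovers a match of essentially 1.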
The first observation of a neutron star-black hole system was made on Jan 5th, 2020, when LIGO and Virgo detected gravitational waves (tiny ripples in the fabric of space and time) from the merger of a neutron star with a black hole. Detailed analysis of the gravitational waves reveals that the neutron star was around twice as massive as the Sun, while the black hole was around nine times as massive as the Sun. The merger itself happened around a billion years ago, before the first dinosaurs appeared on Earth. Remarkably, on January 15th 2020, LIGO and Virgo observed gravitational waves from another merger of a neutron star with a black hole. This merger also took place around a billion years ago, but the system was slightly less massive: the neutron star was around one and a half times as massive as the Sun, while the black hole was around five and a half times as massive. Dr Smith explains the significance: “Astronomers have been searching for neutron stars paired with black holes for decades because they’re such a great laboratory to test fundamental physics. Mergers of neutron stars with black holes dramatically warp space-time, the fabric of the Universe, outputting more power than all the stars in the observable Universe put together. The new discoveries give us a glimpse of the Universe at its most brilliant and extreme. We will learn a great deal about the fundamental nature of space-time and black holes, how matter behaves at the highest possible pressures and densities, how stars are born, live, and die, and how the Universe has evolved throughout cosmic time”. The discovery involved an international team of thousands of scientists, with Australia playing a leading role. “From the design and operation of the detectors to the analysis of the data, Australian scientists are working at the frontiers of astronomy,” Dr Smith added.
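The ‘chirp mass’ is the combination of the two component masses that controls how quickly the signal's frequency sweeps upwards during the inspiral, which is why it is usually the best-measured mass parameter. The snippet below evaluates it for the rounded masses quoted above. It is only a back-of-the-envelope check; the measured values and uncertainties in the published papers should be used for anything quantitative.

```python
def chirp_mass(m1, m2):
    """Chirp mass (m1*m2)**(3/5) / (m1+m2)**(1/5), in the units of m1 and m2."""
    return (m1 * m2) ** 0.6 / (m1 + m2) ** 0.2


# Rounded (black hole, neutron star) masses quoted in the text, in solar masses.
events = {
    "Jan 5th, 2020": (9.0, 2.0),
    "Jan 15th, 2020": (5.5, 1.5),
}
for name, (m_bh, m_ns) in events.items():
    print(f"{name}: chirp mass ~ {chirp_mass(m_bh, m_ns):.1f} solar masses")
```

With these inputs it prints roughly 3.5 solar masses for the January 5th event and 2.4 for the January 15th event. The formula is symmetric in the two masses, so the order of the arguments does not matter.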
Black holes and neutron stars are two of the most extreme objects ever observed in the Universe: they are born from massive stars exploding at the end of their lives. Typical neutron stars have a mass of about one and a half times the mass of the Sun, but all of that mass is contained in an extremely dense star about the size of a city. The star is so dense that atoms cannot sustain their structure as we normally perceive them on Earth. Black holes are even denser than neutron stars: they pack a lot of mass, normally at least three times the mass of our Sun, into a tiny amount of space. Black holes have an “event horizon” at their surface: a point of no return from which not even light can escape. “Black holes are a kind of cosmic enigma,” explained Dr Smith. “The laws of physics as we understand them break down when we try to understand what is at the heart of a black hole. We hope that by observing gravitational waves from black holes merging with neutron stars, or other black holes, we will begin to unravel the mystery of these objects.” When LIGO and Virgo observe neutron stars merging with black holes, the two objects are orbiting each other at around half the speed of light before they collide. “This puts the neutron star under extraordinary strain, causing it to stretch and deform as it nears the black hole. The amount of stretching that the neutron star can undergo depends on the unknown form of matter that they’re made of. Remarkably, we can measure how much the star stretches before it disappears into the black hole, which gives us a totally unique way to learn about the building blocks of protons and neutrons,” Dr Smith said. "Using one of the most powerful Australian supercomputers and the most accurate solutions to Einstein's famous field equations known to date, we were able to measure the properties of these collisions, such as how heavy the neutron stars and black holes are, and how far away these events were," he said.
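How tiny is “a tiny amount of space”? For a non-spinning black hole, the event horizon sits at the Schwarzschild radius, r_s = 2GM/c^2, which works out to about 3 km per solar mass. The sketch below evaluates it with rounded constants. Spinning black holes have somewhat smaller horizons, so treat these numbers as upper-end estimates; the function name is illustrative.

```python
G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8       # speed of light, m/s
M_SUN = 1.989e30  # solar mass, kg


def schwarzschild_radius_km(mass_solar):
    """Event-horizon radius r_s = 2GM/c^2 of a non-spinning black hole, in km."""
    return 2.0 * G * mass_solar * M_SUN / C**2 / 1000.0


for m in (3.0, 5.5, 9.0):
    print(f"{m:4.1f} solar masses -> horizon radius ~ {schwarzschild_radius_km(m):.0f} km")
```

This gives roughly 9 km for a 3-solar-mass black hole, comparable in size to a city-sized neutron star, and about 16 km and 27 km for black holes of 5.5 and 9 solar masses.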
Publication in The Astrophysical Journal Letters (ApJL)

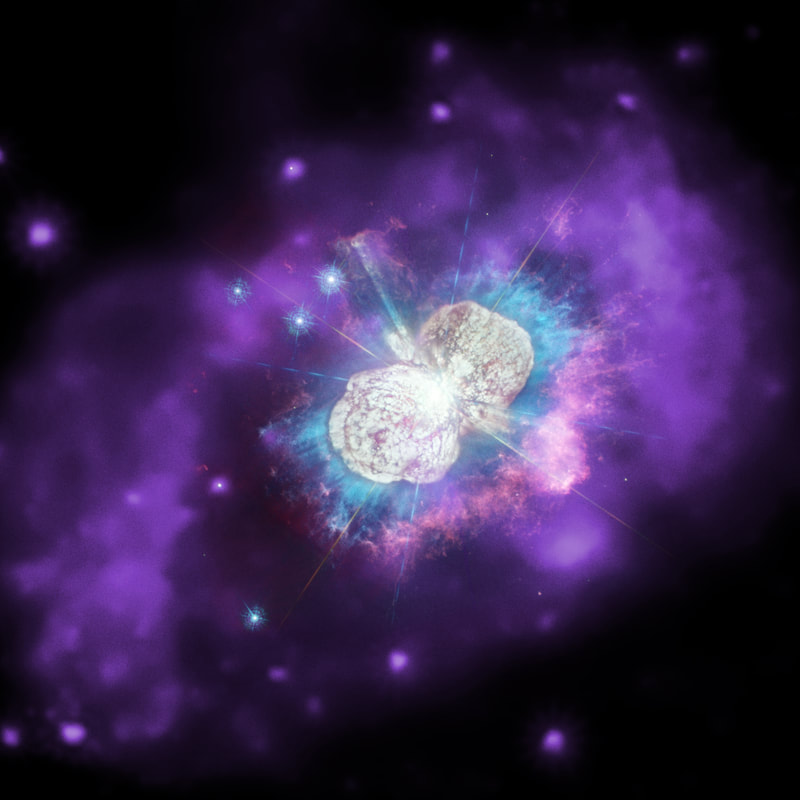

Also featured in The Australian, The Financial Review, The ABC, ABC radio, ABC Breakfast radio, Cosmos magazine, Channel 10 news, The Conversation and more.

Shells of material surround the stars of Eta Carinae. A gamma-ray burst coming from those stars should release large amounts of light as it collides with the denser medium. Image credit: X-ray: NASA/CXC; Ultraviolet/Optical: NASA/STScI; Combined Image: NASA/ESA/N. Smith (University of Arizona), J. Morse (BoldlyGo Institute) and A. Pagan

Gamma-ray bursts are enormous cosmic explosions and are among the brightest and most energetic events in the Universe. Their brightness changes over time, illuminating deep space like a flashlight shining into a dark room. The intense radiation emitted by most observed gamma-ray bursts is thought to be released during a supernova, as a star implodes to form a neutron star or a black hole. In the recently observed gamma-ray burst GRB 160203A, the remains of the explosion started glowing much brighter than standard scientific models predict, even several hours after the initial flash. We now believe that this “rebrightening” was caused by the main body of the burst crashing through shells of material ejected by the source star, or through interstellar “knots”. Both explanations suggest that the standard gamma-ray burst model needs to be re-examined, and that the surrounding space may not be as smooth and uniform as originally assumed. In our study, we collected observations of the burst from telescopes all over the world, including the archives of the Zadko research telescope.
By carefully calibrating the data from different sources and comparing how the brightness changed over time, we characterised the surrounding galaxy and determined key characteristics of the burst: the temporal index (how quickly it fades over time), the spectral index (the overall colour of the burst), and the extinction (how much light is absorbed by the matter between Earth and the burst). One surprising finding was that the burst’s host galaxy is unusually dense, with about the same density as our own galaxy, the Milky Way. The next step was to see how and when the data departed from the model. With further calculations, we identified three time periods in which the brightness differed significantly from the model’s prediction. Although the third period was probably a coincidence, the first and second were too large to ignore. Normally, rebrightening is caused by something happening to the source of the burst itself, such as it suddenly collapsing into a black hole; however, such events normally happen within the first few minutes of a gamma-ray burst, whereas in this event the first rebrightening didn’t start until three hours after the initial explosion. We therefore decided to extend the conventional model of gamma-ray bursts to explain this unusual event. One of the properties of such events is the relationship between the density of the surrounding medium and the intensity of the radiation emitted by the explosion: when the burst runs into denser material, it releases more light. What’s particularly convincing about this explanation is that it applies in many contexts. As stars prepare to explode into supernovas and gamma-ray bursts, they eject their outer shells into the surrounding space. For bursts that don’t come from supernovas, these changes in brightness could be the result of turbulence in the interstellar medium.
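The temporal index and the search for departures from the model can be illustrated with a toy light curve. A common way to describe afterglow fading is a power law, F ∝ t^(-α) (the spectral index β enters as a further factor ν^(-β)), so α is minus the slope of log flux against log time. The sketch below fits α by least squares in log space, flags epochs where the observed flux exceeds the fitted model by a hypothetical factor of 1.5, and includes a helper that undoes an extinction of A magnitudes (which dims the flux by a factor of 10^(-0.4A)). The light curve, the threshold and all function names are invented for illustration; the study's actual calibration and fitting across telescopes are more involved.

```python
import math


def fit_temporal_index(times, fluxes):
    """Least-squares fit of log F = log F0 - alpha * log t; returns (alpha, F0)."""
    xs = [math.log(t) for t in times]
    ys = [math.log(f) for f in fluxes]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    intercept = my - slope * mx
    return -slope, math.exp(intercept)


def rebrightening_epochs(times, fluxes, alpha, f0, factor=1.5):
    """Times at which the observed flux exceeds the power-law model by `factor`."""
    return [t for t, f in zip(times, fluxes) if f > factor * f0 * t ** -alpha]


def correct_extinction(flux, a_mag):
    """Undo an extinction of `a_mag` magnitudes (observed = intrinsic * 10**(-0.4 A))."""
    return flux * 10 ** (0.4 * a_mag)


# Hypothetical light curve (times in hours): a clean power-law decay early on...
early_t = [0.5, 1.0, 1.5, 2.0, 2.5]
early_f = [100.0 * t ** -1.2 for t in early_t]
alpha, f0 = fit_temporal_index(early_t, early_f)

# ...followed by later points, two of which are brighter than the model.
late_t = [3.5, 4.0, 5.0, 6.0]
late_f = [100.0 * t ** -1.2 for t in late_t]
late_f[0] *= 2.0
late_f[1] *= 2.0
flagged = rebrightening_epochs(early_t + late_t, early_f + late_f, alpha, f0)
print(alpha, flagged)
```

Fitting only the early, well-behaved points and then comparing later points against that fit mirrors the idea of looking for periods where the data move away from the model.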
In either case, the change in brightness gives us a new tool to probe the structure of distant space, and we are now eagerly anticipating another burst with similar features to put our new model to the test.

Written by OzGrav PhD student Hayden Crisp, University of Western Australia

What happens if a supernova explosion goes off right beside another star? The star swells up, and scientists predict that this is a frequent occurrence in the Universe. Supernova explosions are the dramatic deaths of massive stars that are more than about 8 times heavier than our Sun. Most of these massive stars are found in binary systems, where two stars closely orbit each other, so many supernovae occur in binaries. The presence of a companion star can also greatly influence how stars evolve and explode. For this reason, astronomers have long been searching for companion stars after supernovae; a handful have been discovered over the past few decades, and some were found to have unusually low temperatures. When a star explodes in a binary system, the debris from the explosion violently strikes the companion star. Usually there’s not enough energy to damage the whole star, but the impact heats up the star’s surface instead. The heat then causes the star to swell up, like a huge burn blister on your skin. This star blister can be 10 to 100 times larger than the star itself. The swollen star appears very bright and cool, which might explain why some of the discovered companion stars had low temperatures. Its inflated state only lasts for an ‘astronomically’ short while: after a few years or decades, the blister can “heal” and the star shrinks back to its original form. In a recently published study, a team of scientists led by OzGrav postdoctoral researcher Dr Ryosuke Hirai (Monash University) carried out hundreds of computer simulations to investigate how companion stars inflate, or swell up, depending on their interaction with a nearby supernova.
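Why would a swollen star look cool? A star's surface (effective) temperature follows from its luminosity L and radius R through the Stefan-Boltzmann law, L = 4 pi R^2 sigma T^4, so T scales as L^(1/4) / R^(1/2): a star that balloons in size cools at its surface unless its luminosity rises much faster. The sketch compares a Sun-like star with a hypothetical inflated version that is 10 times brighter and 10 times larger; these numbers are illustrative assumptions, not results from the simulations.

```python
import math

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4
L_SUN = 3.828e26        # nominal solar luminosity, W
R_SUN = 6.957e8         # nominal solar radius, m


def effective_temperature(luminosity, radius):
    """Surface temperature in kelvin from L = 4 * pi * R**2 * sigma * T**4."""
    return (luminosity / (4.0 * math.pi * radius**2 * SIGMA)) ** 0.25


t_sun = effective_temperature(L_SUN, R_SUN)
# Hypothetical inflated companion: 10 times brighter, but 10 times larger.
t_inflated = effective_temperature(10 * L_SUN, 10 * R_SUN)
print(f"normal: {t_sun:.0f} K, inflated: {t_inflated:.0f} K")
```

The Sun comes out at roughly 5,770 K and the hypothetical inflated star at roughly 3,200 K, cooler despite being brighter, because its light is spread over a surface 100 times larger.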
The team found that the luminosity of an inflated star is correlated only with its mass and does not depend on the strength of its interaction with the supernova. The swelling also lasts longer when the two stars are closer together. “We applied our results to a supernova called SN2006jc, which has a companion star with a low temperature. If this is in fact an inflated star as we believe, we expect it should rapidly shrink in the next few years,” explains Hirai. The number of companion stars detected after supernovae is steadily growing. If scientists can observe an inflated companion star and its contraction, these correlations can be used to measure the properties of the binary system before the explosion; such insights are extremely rare and important for understanding how massive stars evolve. “We think it’s important to not only find companion stars after supernovae, but to monitor them for a few years to decades to see if it shrinks back,” says Hirai. As featured on Phys.org.

Have you heard the joke about how many stars it takes to create a merging binary black hole? Hundreds of thousands in the real world… but only a few, if they’re OzStars and you’re using the latest COMPAS version with machine learning tools.